All the compute – none of the complexity

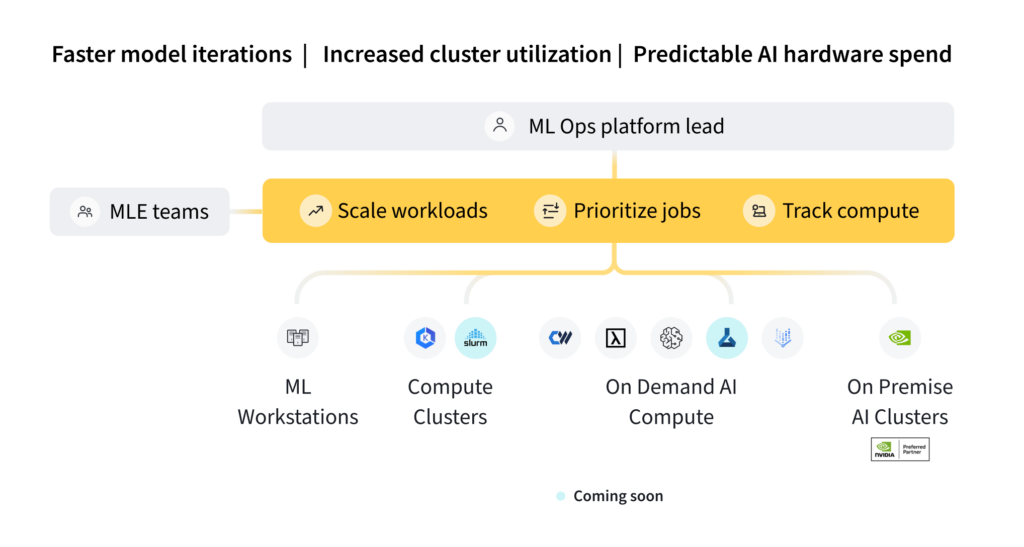

Launch a job into any target environment to dramatically scale ML training workloads from a local machine to distributed compute. Easily access external environments, more powerful GPUs, and clusters to speed up your ML workflow and scale it predictably.

Bridge ML practitioners and MLOps

Remove organizational silos with one common interface. Practitioners get the compute they need with all the infrastructure complexity abstracted away, while MLOps maintains oversight and observability across the infrastructure environments they manage. Work together to scale ML activities up and out.

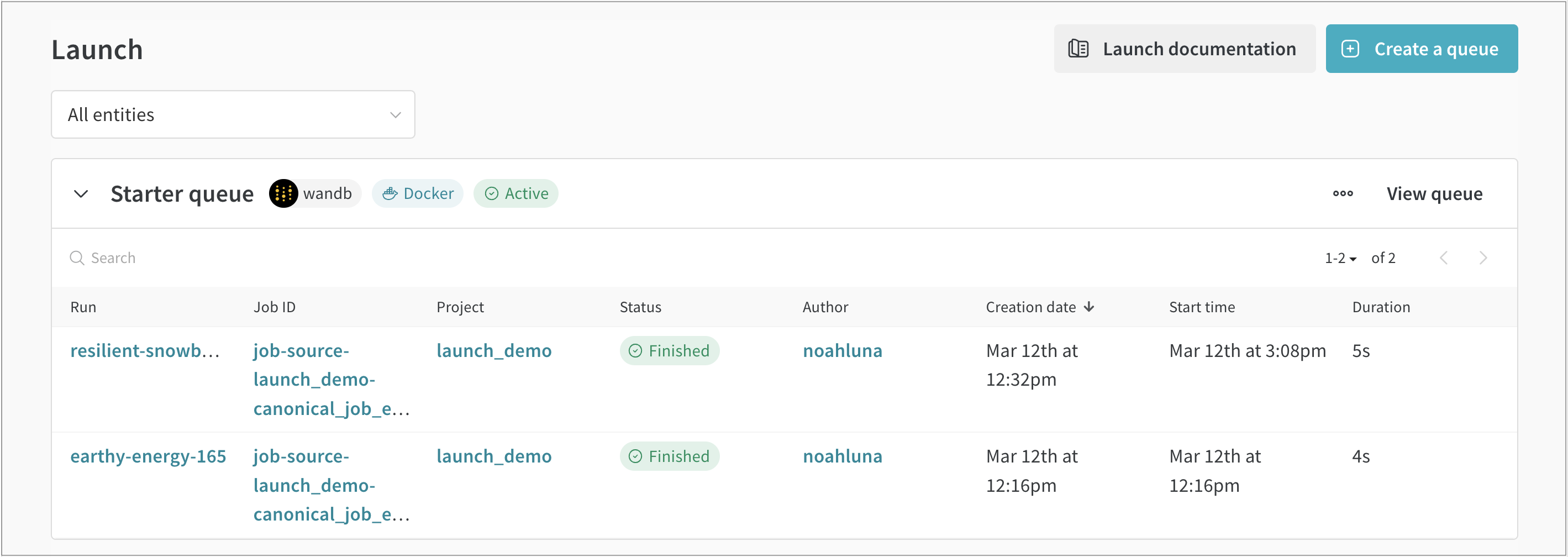

Easily run continuous integration and evaluation jobs

Drastically reduce the cycle time for deploying approved models to production inference environments. Evaluate your models more often and more thoroughly, and reproduce any run with a single click. Use Sweeps on Launch to tune hyperparameters before re-running jobs.

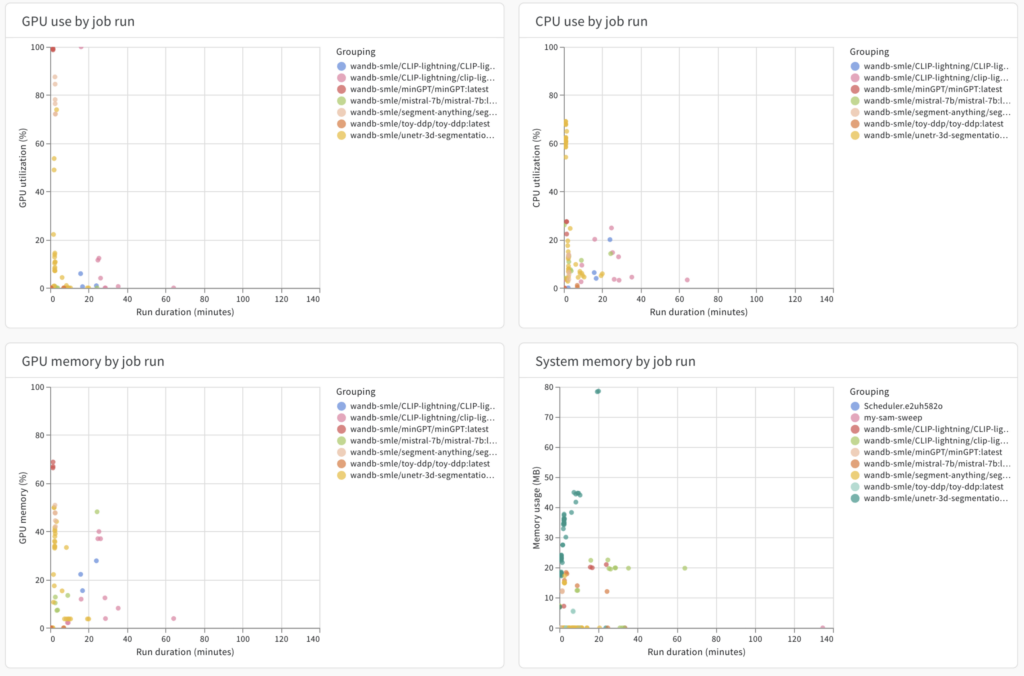

Improved observability for ML Engineers

Gain visibility into how your ML infrastructure budget is being used, or left unused. Justify your spend on significant infrastructure investments, and set better defaults so those resources are used efficiently.

Scale your ML workflows today with W&B Launch

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Model Registry

Register and manage your ML models

Automations

Trigger workflows automatically

Launch

Package and run your ML workflow jobs

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications