How to Track and Compare Audio Transcriptions with Whisper and Weights & Biases

Overview

How well and how quickly does Whisper transcribe speech? In this article, we’ll use Whisper, OpenAI’s open-source automatic speech recognition (ASR) library, to transcribe 75 episodes of Gradient Dissent, the machine learning podcast by Weights & Biases.

Whisper is a speech recognition neural network released by OpenAI. The model was trained on 680k hours of multilingual data and supports 99 languages with varying quality. Several projects build on Whisper, such as Whisper.cpp, a high-performance C++ implementation of the model's inference without external dependencies that can run on multiple cores on Apple Silicon (e.g., M1).

We’ll compare three Whisper model sizes, tiny (39M parameters), base (74M parameters), and large (1550M parameters), and evaluate two things:

- Relative transcription speed of different model sizes on GPUs for the Python implementation of Whisper

- Relative transcription speed of different model sizes on CPUs for the C++ implementation of Whisper

Then, we’ll show how to use Weights & Biases (W&B) as a single source of truth that helps track and visualize all of these experiments.

Preview of project outcomes

The table below is a transcription of the first ~70 seconds of Phil Brown — How IPUs are Advancing Machine Intelligence, an interview between Phil Brown (Director of Applications at Graphcore, at the time of recording) and Lukas Biewald (CEO and co-founder of Weights & Biases).

Each row is a 3-7 second audio snippet, with the corresponding transcriptions produced by the tiny, base, and large Whisper models.

In this article, we show you how to:

- log experiments to W&B from outside Python, namely from C++ or a bash script run on the command line

- run a line-by-line comparison of audio snippets and their respective transcriptions in a W&B table

Bonus

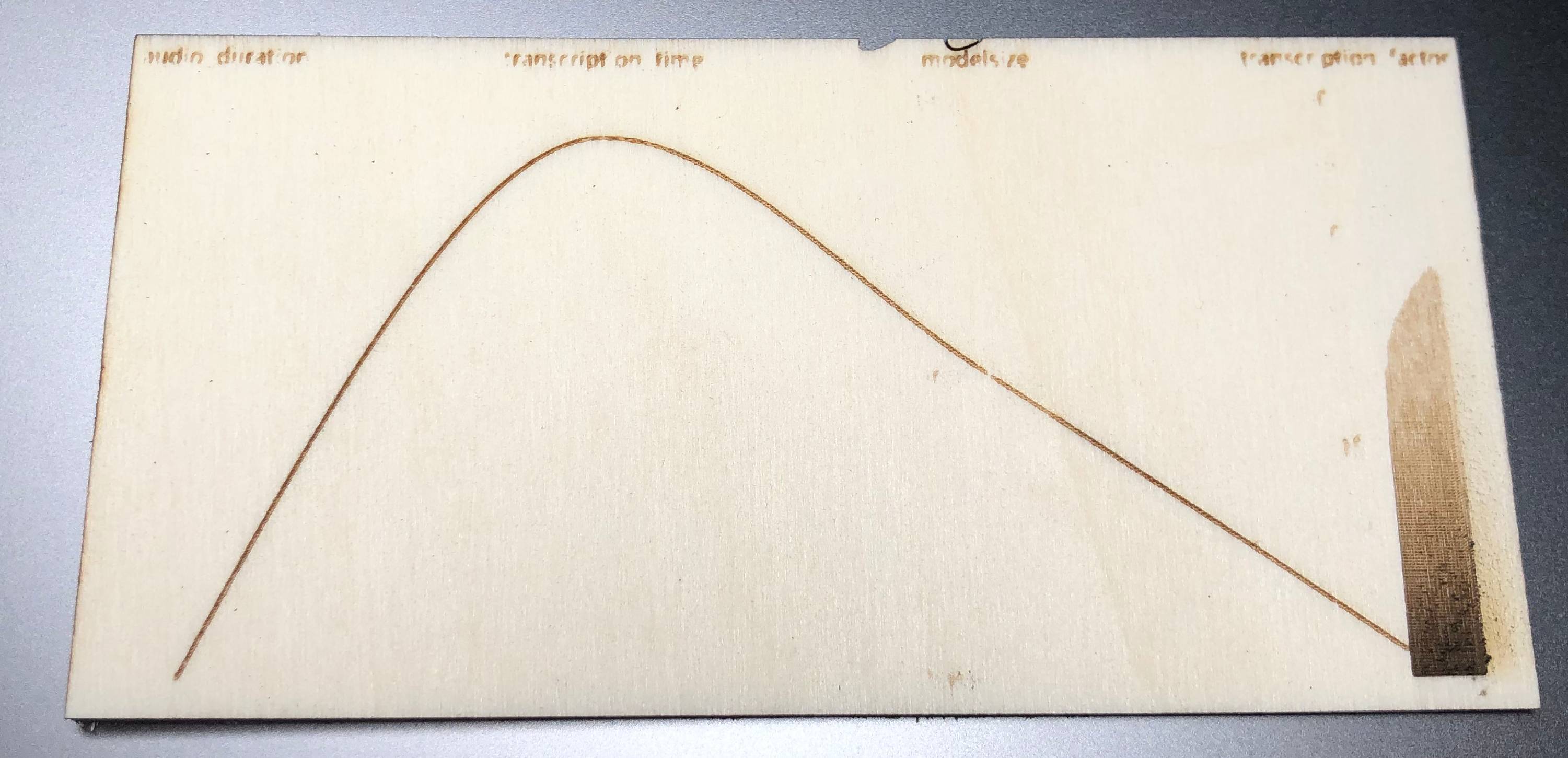

Here's a laser cut version 🔥 of a W&B parallel coordinates plot for the large model!

The Colab

If you'd like to follow along, you can find code for Whisper in Python and Whisper.cpp in C++ in the Colab:

The code, as a One-liner

Let's get started and see Whisper in action. We run this command:

whisper chip-huyen-ml-research.mp3 --model tiny --language=en

1️⃣ Collect Data

First, we collect the data. We use yt-dlp to download all the episodes from the Gradient Dissent YouTube playlist.

Installation

# Install yt-dlp

python3 -m pip install -U yt-dlp

# Download all episodes as MP3 files (the URL is quoted so the shell does not interpret the "?")

yt-dlp -i -x --yes-playlist --audio-format mp3 "https://www.youtube.com/playlist?list=PLD80i8An1OEEb1jP0sjEyiLG8ULRXFob_"

This is what the downloaded and renamed MP3 files look like in W&B Artifacts.
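The renaming step itself is not shown in this article. As a minimal sketch, assuming a lowercase, hyphen-separated naming scheme like the `chip-huyen-ml-research.mp3` file used in the one-liner above, the downloaded files could be renamed as follows. The helper names `slugify` and `rename_downloads` are hypothetical and not part of yt-dlp or W&B.

```python
import re
import unicodedata
from pathlib import Path


def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated file stem (assumed scheme)."""
    # Decompose accented characters and drop everything that is not ASCII.
    ascii_title = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_title.lower()).strip("-")


def rename_downloads(folder: str, extension: str = "mp3") -> list[Path]:
    """Rename every audio file in `folder` to its slugified name."""
    renamed = []
    for path in sorted(Path(folder).glob(f"*.{extension}")):
        target = path.with_name(f"{slugify(path.stem)}.{extension}")
        # Skip files that already have the target name or would overwrite another file.
        if target != path and not target.exists():
            path.rename(target)
            path = target
        renamed.append(path)
    return renamed


print(slugify("Chip Huyen: ML Research"))  # chip-huyen-ml-research
```

Calling `rename_downloads(".")` in the download folder would apply the same scheme to every MP3 file there.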

2️⃣ Install dependencies

Then, we install the libraries that we need to transcribe the fresh data.

- wandb: We track experiments and version our models with Weights & Biases

- whisper: We transcribe audio files with Whisper

- setuptools-rust: Used by Whisper for tokenization

- ffmpeg-python: Used by Whisper for down-mixing and resampling

Installation and Initialization

!pip install wandb -qqq

!pip install git+https://github.com/openai/whisper.git -qqq

!pip install setuptools-rust

!pip install ffmpeg-python -qqq

import wandb

wandb.login(host="https://api.wandb.ai")

from google.colab import drive

drive.mount('/content/gdrive')

3️⃣ Transcribe a single file

This is a simple example of how to use Whisper in Python.

import whisper

model = whisper.load_model("base")

result = model.transcribe("audio.mp3")

print(result["text"])
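Besides the full text, the dictionary returned by `transcribe` also contains a `segments` list with `start`, `end`, and `text` entries for each snippet, which is handy for row-by-row comparisons like the preview table. The following is a minimal sketch: `segments_to_rows` is a hypothetical helper, and `example_result` is made-up stand-in data so that the block runs without a model or an audio file.

```python
def segments_to_rows(result: dict) -> list[tuple[float, float, str]]:
    """Return one (start_seconds, end_seconds, text) row per Whisper segment."""
    return [
        (segment["start"], segment["end"], segment["text"].strip())
        for segment in result.get("segments", [])
    ]


# Stand-in for the dict returned by model.transcribe(); the values are made up.
example_result = {
    "text": " Hello and welcome. Thanks for having me.",
    "segments": [
        {"start": 0.0, "end": 3.2, "text": " Hello and welcome."},
        {"start": 3.2, "end": 6.5, "text": " Thanks for having me."},
    ],
}

for start, end, text in segments_to_rows(example_result):
    print(f"[{start:6.1f}s - {end:6.1f}s] {text}")
```

With a real model, `segments_to_rows(model.transcribe("audio.mp3"))` would yield the rows for one file.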

4️⃣ Track a minimalistic experiment

This is a simple example of how to use W&B in Python.

import wandb

with wandb.init(project="gradient-dissent-transcription"):

    train_acc = 0.7

    train_loss = 0.3

    wandb.log({'accuracy': train_acc, 'loss': train_loss})

5️⃣ Version files with W&B artifacts

# Bind the run to a name so that we can log the artifact to it

with wandb.init(project="gradient-dissent-transcription") as run:

    transcript = wandb.Artifact('transcript', type="transcription")

    transcript.add_file('transcript.txt')

    run.log_artifact(transcript)

6️⃣ Apply steps 3️⃣ + 4️⃣ + 5️⃣ to audio collection

import whisper

import wandb

from pathlib import Path

import time

import ffmpeg

# Specify project settings

PROJECT_NAME="gradient-dissent-transcription"

JOB_DATA_UPLOAD="data-upload"

JOB_TRANSCRIPTION="transcription"

ARTIFACT_VERSION="latest"

AUDIOFORMAT = 'mp3'

MODELSIZE = 'tiny' # tiny, base, small, medium, large

# Load the Whisper model to perform transcription

model = whisper.load_model(MODELSIZE)

# Initialize W&B project

run = wandb.init(project=PROJECT_NAME, job_type=JOB_TRANSCRIPTION)

# Download raw Gradient Dissent audio data from W&B Artifacts

artifact = run.use_artifact('hans-ramsl/gradient-dissent-transcription/gradient-dissent-audio:'+ARTIFACT_VERSION, type='raw_audio')

artifact_dir = artifact.download(root="/content/")

wandb.finish()

# Path to all audio files in `artifact_dir`

audiofiles = Path(artifact_dir).glob('*.'+AUDIOFORMAT)

# Iterate over all audio files

for audio_file in audiofiles:

    # Specify the path to where the audio file is stored

    path_to_audio_file = str(audio_file)

    # Measure start time

    start = time.time()

    # Transcribe. Here is where the heavy-lifting takes place. 🫀🏋🏻

    result = model.transcribe(path_to_audio_file)

    # Measure duration of transcription time

    transcription_time = time.time() - start

    # Define a path to transcript file

    path_to_transcript_file = path_to_audio_file + '.txt'

    # Specify parameters to be logged (ffmpeg.probe returns the duration as a string, so convert it to seconds as a float)

    duration = float(ffmpeg.probe(path_to_audio_file)['format']['duration'])

    # Relative speed: seconds of audio transcribed per second of compute

    transcription_factor = duration / transcription_time

    # Save transcription to local text file

    transcript_text = result['text']

    with open(path_to_transcript_file, 'w') as f:

        f.write(transcript_text)

    # Log the local transcript as W&B artifact (one run per audio file)

    with wandb.init(project=PROJECT_NAME, job_type=JOB_TRANSCRIPTION) as run:

        # Build a transcription table

        transcript_table = wandb.Table(columns=['audio_file', 'transcript', 'audio_path', 'audio_format', 'modelsize', 'transcription_time', 'audio_duration', 'transcription_factor'])

        # Add audio file, transcription and parameters to table

        transcript_table.add_data(wandb.Audio(path_to_audio_file), transcript_text, path_to_audio_file, AUDIOFORMAT, MODELSIZE, transcription_time, duration, transcription_factor)

        transcript_artifact = wandb.Artifact('transcript', type="transcription")

        transcript_artifact.add(transcript_table, 'transcription_table')

        # Log artifact including table to W&B

        run.log_artifact(transcript_artifact)

        # Log parameters to Runs table (Experiment Tracking)

        run.log({

            'transcript': transcript_text,

            'audio_path': path_to_audio_file,

            'audio_format': AUDIOFORMAT,

            'modelsize': MODELSIZE,

            'transcription_time': transcription_time,

            'audio_duration': duration,

            'transcription_factor': transcription_factor

        })

🤓 💻 If you want to see the C++ code we use, you can find it in the Colab.

Here is a short preview of the command line usage:

#!/bin/bash

input_file="$1"

model_size="$2"

cores="$3"

output_file=$(realpath "${input_file%.*}.wav")

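# Convert to 16 kHz mono 16-bit PCM WAV, the input format whisper.cpp expects (-y overwrites an existing file)

ffmpeg -y -hide_banner -loglevel error -i "$input_file" -acodec pcm_s16le -ac 1 -ar 16000 "$output_file"

# Transcribe with whisper.cpp and write the transcript to a temporary file

exec /Users/hans/code/whisper.cpp/main -m "/Users/hans/code/whisper.cpp/models/ggml-$model_size.bin" -l en -t "$cores" -f "$output_file" > /Users/hans/code/whisper.cpp/transcriptions/temp-transcript.txt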
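One way to get timings from a command-line tool like this into an experiment tracker is to wrap the call in a small Python function that measures the wall-clock time. The following is a minimal sketch of that idea and may differ from what the Colab does. The function name `time_command` is hypothetical, and the placeholder command only exists so that the block runs anywhere.

```python
import subprocess
import sys
import time


def time_command(command: list[str]) -> dict:
    """Run `command`, wait for it to finish, and return simple timing metrics."""
    start = time.perf_counter()
    completed = subprocess.run(command, capture_output=True, text=True)
    elapsed = time.perf_counter() - start
    return {
        "command": " ".join(command),
        "return_code": completed.returncode,
        "transcription_time": elapsed,  # wall-clock seconds
        "stdout": completed.stdout,
    }


# Placeholder command; swap in the real script call, for example
# ["./transcribe.sh", "episode.mp3", "tiny", "8"] (hypothetical script name).
metrics = time_command([sys.executable, "-c", "print('hello')"])
print(metrics["return_code"], round(metrics["transcription_time"], 3))
```

Inside a W&B run, the numeric entries of the returned dictionary could then be passed to `wandb.log`, just like the metrics in step 4️⃣.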

After successfully running steps 1️⃣ - 6️⃣, we can now visualize the results and learn from them.

7️⃣ Compare Model Sizes

W&B makes it easy to analyze the performance of these models. During experiment tracking, we logged values such as transcription_factor and transcript. The transcription_factor is the relative speed: the audio duration divided by the transcription time. The highest relative speed is 55.158 and the lowest is 1.199. Why is there such a huge difference in speed? Let's find out.
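As a reminder of how this number comes about, here is the calculation from the transcription loop in step 6️⃣ as a standalone sketch. The function name and the example values (a one-hour episode transcribed in 72 seconds) are made up for illustration.

```python
def transcription_factor(audio_duration_s: float, transcription_time_s: float) -> float:
    """Seconds of audio transcribed per second of wall-clock time."""
    if transcription_time_s <= 0:
        raise ValueError("transcription_time_s must be positive")
    return audio_duration_s / transcription_time_s


# Illustrative values: 3600 s of audio transcribed in 72 s.
print(transcription_factor(3600, 72))  # 50.0
```

A factor of 1 means the model transcribes in real time, and anything above 1 is faster than real time.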

Relative Speed Analysis

One episode, many transcriptions

To find out why there is such a spread, we'll look at one specific episode, Pete Warden — Practical Applications of TinyML. We transcribed this episode with different Whisper model sizes, both with the original Python version on a GPU and with the C++ port (Whisper.cpp) on CPUs:

- 🚤 tiny

- 🛥️ base

- 🚢 large

In the next figure, the Python runs on GPU are shown in green 🟢 and the C++ runs on CPUs (Mac M1, 8 cores) in blue 🔵.

The GPU used here is an NVIDIA A100 with a maximum power consumption of 400 W, while the CPUs of the M1 Pro have a maximum power consumption of 68.5 W.

Going by these maximum ratings, the CPU's peak power draw is about 83% lower (68.5 W vs. 400 W).

🚢 Large Models

For large models, the spread in transcription speed is small. The relative speed ranges from 1.199 to 2.042, a 1.7x difference (Δ) between the slowest and the fastest run.

🛥️ Base Models

For base models, the spread in transcription speed is slightly larger. The relative speed ranges from 9.953 to 21.445, a 2.15x difference (Δ).

🚤 Tiny Models

For tiny models, the spread in transcription speed is very large. The relative speed ranges from 5.819 to 50, an 8.6x difference (Δ).
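The speed Δ values above are simply the fastest relative speed divided by the slowest one for each model size. As a quick check, this sketch recomputes them from the ranges reported in this section. The `speed_delta` helper is a hypothetical name.

```python
# (slowest, fastest) relative transcription speed per model size, as reported above.
ranges = {
    "large": (1.199, 2.042),
    "base": (9.953, 21.445),
    "tiny": (5.819, 50.0),
}


def speed_delta(slowest: float, fastest: float) -> float:
    """Ratio between the fastest and the slowest relative speed."""
    return fastest / slowest


for model_size, (slowest, fastest) in ranges.items():
    print(f"{model_size}: {speed_delta(slowest, fastest):.2f}x")
# large: 1.70x
# base: 2.15x
# tiny: 8.59x
```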

Model Performance

This parallel coordinates plot shows how the different model sizes perform in terms of transcription time and relative transcription speed.

Model Performance (by model size)

This grouped parallel coordinates plot summarizes model performance. It shows that tiny models have a transcription factor of ~38x on average, base models ~18x, and large models ~1.6x.

Access all transcript files

All episodes that have been transcribed can be seen below.