How to Track and Compare Audio Transcriptions With Whisper and Weights & Biases

In this article, we'll learn how to use OpenAI's Whisper to transcribe a collection of audio files and easily track the progress with Weights & Biases.

Created on February 3 | Last edited on May 7


In case you're not familiar with Whisper, it's OpenAI's open-source automatic speech recognition (ASR) library. Whisper was trained on 680,000 hours of multilingual training data and supports 99 languages, with quality varying by language.

Here's what we'll be covering in this article:

Table of Contents

- Using Whisper To Transcribe a Podcast
- Added Bonus
- The Colab
- The Code, in Python
  - 1️⃣ Collect Data
  - 2️⃣ Install Dependencies
  - 3️⃣ Transcribe a single file
  - 4️⃣ Track a minimalistic experiment
  - 5️⃣ Version Files With W&B Artifacts
  - 6️⃣ Apply Steps 3️⃣ + 4️⃣ + 5️⃣ to Audio Collection
- The Code, in C++
- 7️⃣ Compare Model Sizes
- Cost Analysis and Conclusion

Using Whisper To Transcribe a Podcast

Today, we'll test how well Whisper transcribes 75 episodes of Gradient Dissent, the machine learning podcast by Weights & Biases. Specifically, we'll compare three Whisper model sizes, tiny (39M parameters), base (74M parameters), and large (1550M parameters), and evaluate two things:

- Relative transcription speed of different model sizes on GPUs for the Python implementation of Whisper

- Relative transcription speed of different model sizes on CPUs for the C++ implementation of Whisper

Then, we’ll show how to use Weights & Biases (W&B) as a single source of truth that helps track and visualize all these experiments. In more detail, we will:

- log experiments to W&B from outside Python, namely from C++ or a bash script running on the command line.

- compare audio snippets line by line with their respective transcriptions in a W&B Table.

Did you know that you can track experiment results from C++ or any other language running in a bash script? We'll show you how by using `subprocess`.

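A minimal sketch of that idea, assuming a hypothetical helper named `run_timed` (it is not part of W&B or Whisper): Python's `subprocess.run` launches an external program, such as the whisper.cpp wrapper script shown in the C++ section, while Python measures the wall-clock time and collects the output into a dict that could be passed to `run.log(...)` inside a `wandb.init()` block. Python itself stands in for the external program here so the example runs anywhere.

```python
import subprocess
import sys
import time


def run_timed(cmd: list) -> dict:
    """Run an external command and collect metrics that could be passed to run.log().

    Hypothetical helper for illustration; not part of the wandb or whisper packages.
    """
    start = time.perf_counter()
    # capture_output collects stdout/stderr; check=True raises CalledProcessError on failure
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    elapsed = time.perf_counter() - start
    return {
        "command": " ".join(cmd),
        "wall_time_s": elapsed,
        "stdout": completed.stdout,
    }


# Stand-in for an external program such as the whisper.cpp wrapper script
metrics = run_timed([sys.executable, "-c", "print('hello from a subprocess')"])
print(metrics["stdout"].strip())  # hello from a subprocess

# Inside `with wandb.init(project="gradient-dissent-transcription") as run:`
# you would then call run.log(metrics)
```

Using `check=True` means a failed transcription raises an exception instead of silently logging an empty transcript.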


A teaser of our project's outcome

The table below is a transcription of the first ~70 seconds of Phil Brown — How IPUs are Advancing Machine Intelligence, an interview between Phil Brown (Director of Applications at Graphcore at the time of recording) and Lukas Biewald (CEO and Co-founder of Weights & Biases).

Each row is a 3-7 second audio snippet, with the corresponding transcriptions produced by the tiny, base, and large Whisper models.

Take a look at rows 15 and 16! ▶️ Play the audio and compare the transcripts. What do you think about the quality of the different model sizes?



Added Bonus

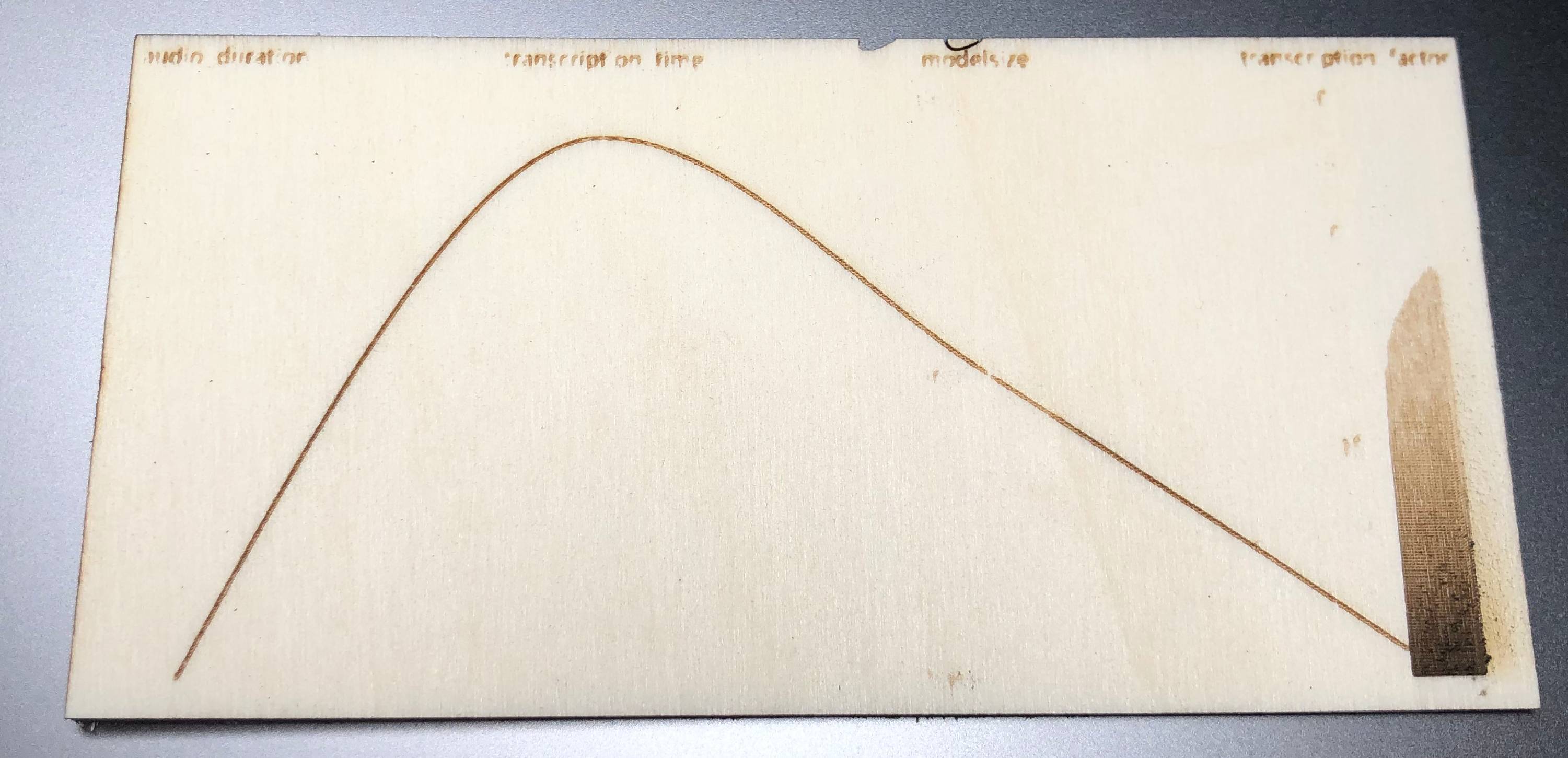

Here's a laser-cut version 🔥 of a W&B parallel coordinates plot for the large model! Why, you ask? You should really be asking, "Why not?"

The Colab

If you'd like to follow along, you can find code for Whisper in Python and Whisper.cpp in C++ in the Colab:

The Code, in Python

Let's get started and see Whisper in action. To start, we'll transcribe a single audio file with the Whisper command-line tool:

whisper chip-huyen-ml-research.mp3 --model tiny --language=en

1️⃣ Collect Data

For this experiment, we first need to collect the data (in this case, audio from our podcast). We'll use yt-dlp to download all episodes from the Gradient Dissent YouTube playlist.

Installation

Now, run:

# Install yt-dlp
python3 -m pip install -U yt-dlp

# Download all episodes as MP3 files (the URL is quoted so the shell doesn't interpret "?")
yt-dlp -i -x --yes-playlist --audio-format mp3 "https://www.youtube.com/playlist?list=PLD80i8An1OEEb1jP0sjEyiLG8ULRXFob_"

2️⃣ Install Dependencies

Next, we'll install the libraries we need to transcribe the downloaded audio:

- wandb: to track experiments and version our models with Weights & Biases

- whisper: to transcribe audio files with Whisper

- setuptools-rust: used by Whisper for tokenization

- ffmpeg-python: used by Whisper for down-mixing and resampling

Installation and Initialization

!pip install wandb -qqq
!pip install git+https://github.com/openai/whisper.git -qqq
!pip install setuptools-rust
!pip install ffmpeg-python -qqq

import wandb
wandb.login(host="https://api.wandb.ai")

from google.colab import drive
drive.mount('/content/gdrive')

3️⃣ Transcribe a single file

This is a simple example of how to use Whisper in Python:

import whisper

model = whisper.load_model("base")
result = model.transcribe("audio.mp3")
print(result["text"])
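Besides the full `text`, the dictionary returned by `model.transcribe` also contains a `segments` list in which each segment has `start` and `end` times in seconds plus its own `text`; that is what makes per-snippet comparisons like the teaser table possible. A minimal sketch that turns segments into timestamped lines; `format_timestamp` and `format_segments` are hypothetical helpers, and the sample dict is hand-written to mimic the shape of Whisper's output, not a real transcript:

```python
def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS.mmm."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def format_segments(result: dict) -> list:
    """Turn the 'segments' of a Whisper result dict into timestamped lines."""
    lines = []
    for seg in result["segments"]:
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"[{start} --> {end}] {seg['text'].strip()}")
    return lines


# Hand-written stand-in with the same shape as the dict returned by model.transcribe()
sample_result = {
    "text": " This is the first segment. This is the second segment.",
    "segments": [
        {"start": 0.0, "end": 3.5, "text": " This is the first segment."},
        {"start": 3.5, "end": 7.24, "text": " This is the second segment."},
    ],
}

for line in format_segments(sample_result):
    print(line)
# [00:00:00.000 --> 00:00:03.500] This is the first segment.
# [00:00:03.500 --> 00:00:07.240] This is the second segment.
```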

4️⃣ Track a minimalistic experiment

This is a simple example of how to use W&B Experiment Tracking in Python:

import wandb

with wandb.init(project="gradient-dissent-transcription"):
    train_acc = 0.7
    train_loss = 0.3
    wandb.log({'accuracy': train_acc, 'loss': train_loss})

5️⃣ Version Files With W&B Artifacts

This is a simple example of how to use W&B Artifacts in Python:

import wandb

# Bind the run with `as run` so that run.log_artifact() is defined
with wandb.init(project="gradient-dissent-transcription") as run:
    transcript = wandb.Artifact('transcript', type="transcription")
    transcript.add_file('transcript.txt')
    run.log_artifact(transcript)

6️⃣ Apply Steps 3️⃣ + 4️⃣ + 5️⃣ to Audio Collection

Based on the simple examples above, we'll combine transcription (`model.transcribe`), experiment tracking (`wandb.log`), and versioning (`wandb.Artifact`) to transcribe an entire collection of audio files!

import whisper
import wandb
from pathlib import Path
import time
import ffmpeg

# Specify project settings
PROJECT_NAME = "gradient-dissent-transcription"
JOB_DATA_UPLOAD = "data-upload"
JOB_TRANSCRIPTION = "transcription"
ARTIFACT_VERSION = "latest"
AUDIOFORMAT = 'mp3'
MODELSIZE = 'tiny'  # tiny, base, small, medium, large

# Load the Whisper model to perform transcription
model = whisper.load_model(MODELSIZE)

# Initialize W&B project
run = wandb.init(project=PROJECT_NAME, job_type=JOB_TRANSCRIPTION)

# Download raw Gradient Dissent audio data from W&B Artifacts
artifact = run.use_artifact('hans-ramsl/gradient-dissent-transcription/gradient-dissent-audio:' + ARTIFACT_VERSION, type='raw_audio')
artifact_dir = artifact.download(root="/content/")
wandb.finish()

# Paths to all audio files in `artifact_dir`
audiofiles = Path(artifact_dir).glob('*.' + AUDIOFORMAT)

# Iterate over all audio files
for audio_file in audiofiles:
    # Specify the path to where the audio file is stored
    path_to_audio_file = str(audio_file)

    # Measure start time
    start = time.time()

    # Transcribe. Here is where the heavy lifting takes place. 🫀🏋🏻
    result = model.transcribe(path_to_audio_file)

    # Measure how long the transcription took (seconds)
    transcription_time = time.time() - start

    # Define a path to the transcript file
    path_to_transcript_file = path_to_audio_file + '.txt'

    # Specify parameters to be logged (ffmpeg.probe returns the duration as a string)
    duration = float(ffmpeg.probe(path_to_audio_file)['format']['duration'])
    transcription_factor = duration / transcription_time

    # Save transcription to local text file
    transcript_text = result['text']
    with open(path_to_transcript_file, 'w') as f:
        f.write(transcript_text)

    # Log the transcript table as a W&B artifact
    with wandb.init(project=PROJECT_NAME, job_type=JOB_TRANSCRIPTION) as run:
        # Build a transcription table
        transcript_table = wandb.Table(columns=['audio_file', 'transcript', 'audio_path', 'audio_format', 'modelsize', 'transcription_time', 'audio_duration', 'transcription_factor'])

        # Add audio file, transcription and parameters to table
        transcript_table.add_data(wandb.Audio(path_to_audio_file), transcript_text, path_to_audio_file, AUDIOFORMAT, MODELSIZE, transcription_time, duration, transcription_factor)
        transcript_artifact = wandb.Artifact('transcript', type="transcription")
        transcript_artifact.add(transcript_table, 'transcription_table')

        # Log artifact including table to W&B
        run.log_artifact(transcript_artifact)

        # Log parameters to Runs table (Experiment Tracking)
        run.log({
            'transcript': transcript_text,
            'audio_path': path_to_audio_file,
            'audio_format': AUDIOFORMAT,
            'modelsize': MODELSIZE,
            'transcription_time': transcription_time,
            'audio_duration': duration,
            'transcription_factor': transcription_factor,
        })
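The loop above reads the audio duration with `ffmpeg.probe` from ffmpeg-python, which handles MP3 and most other formats. For WAV files, such as the 16 kHz mono files the whisper.cpp script in the next section produces, Python's standard `wave` module is enough: the duration is the number of frames divided by the frame rate. A minimal sketch; `wav_duration_seconds` and `write_silent_wav` are hypothetical helpers, and the silent test file only exists so the example runs on its own:

```python
import os
import tempfile
import wave


def wav_duration_seconds(path: str) -> float:
    """Return the duration of a WAV file in seconds (frame count / frame rate)."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def write_silent_wav(path: str, seconds: float, rate: int = 16000) -> None:
    """Write a mono, 16-bit PCM WAV file containing silence."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)     # mono
        wav.setsampwidth(2)     # 16-bit samples
        wav.setframerate(rate)  # 16 kHz by default
        wav.writeframes(b"\x00\x00" * int(seconds * rate))


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "silence.wav")
    write_silent_wav(path, seconds=2.0)
    print(wav_duration_seconds(path))  # 2.0
```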

The Code, in C++

🤓 💻 If you want to see the C++ code we use, find it in the Colab.

Here is a short preview of the command line usage:

#!/bin/bash
input_file="$1"
model_size="$2"
cores="$3"
output_file=$(realpath "${input_file%.*}.wav")

# Convert the input to 16 kHz, mono, 16-bit PCM WAV for whisper.cpp
yes | ffmpeg -hide_banner -loglevel error -i "$input_file" -acodec pcm_s16le -ac 1 -ar 16000 "$output_file"

# Run whisper.cpp with the chosen model size and number of threads
exec /Users/hans/code/whisper.cpp/main -m /Users/hans/code/whisper.cpp/models/ggml-$model_size.bin -l en -t "$cores" -f "$output_file" > /Users/hans/code/whisper.cpp/transcriptions/temp-transcript.txt
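Because the script redirects whisper.cpp's console output to `temp-transcript.txt`, the Python side has to read that output back before logging it to W&B. A minimal sketch, assuming each transcribed segment appears on its own line in the form `[00:00:00.000 --> 00:00:04.000]   text` (check this against your whisper.cpp version); `parse_whisper_cpp` is a hypothetical helper, and the sample string is hand-written:

```python
import re

# Assumed line format: "[HH:MM:SS.mmm --> HH:MM:SS.mmm]   text"
LINE_RE = re.compile(
    r"^\[(\d{2}):(\d{2}):(\d{2}\.\d{3}) --> (\d{2}):(\d{2}):(\d{2}\.\d{3})\]\s*(.*)$"
)


def to_seconds(h: str, m: str, s: str) -> float:
    """Convert hour, minute and second strings to seconds."""
    return int(h) * 3600 + int(m) * 60 + float(s)


def parse_whisper_cpp(output: str) -> list:
    """Return (start_s, end_s, text) tuples; lines that don't match are skipped."""
    segments = []
    for line in output.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            g = match.groups()
            segments.append((to_seconds(*g[0:3]), to_seconds(*g[3:6]), g[6].strip()))
    return segments


sample = """
[00:00:00.000 --> 00:00:04.000]   First segment.
[00:00:04.000 --> 00:01:02.500]   Second segment.
"""
segments = parse_whisper_cpp(sample)
transcript_text = " ".join(text for _, _, text in segments)
print(transcript_text)  # First segment. Second segment.
print(segments[-1][1])  # 62.5
```

The joined text and the last segment's end time could then be logged with `run.log(...)` just like the Python results.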

After running steps 1️⃣ to 6️⃣, we can visualize the results and learn from them.

7️⃣ Compare Model Sizes

W&B makes it easy to analyze the performance of these models. During experiment tracking, we logged metrics such as `transcription_factor` (audio duration divided by transcription time, i.e., relative speed) and `transcript`. Below, you can see that the highest relative speed is 55.158 and the lowest is 1.199.
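In the transcription loop, `transcription_factor` is the audio duration divided by the wall-clock transcription time, so a factor of 10 means one hour of audio is transcribed in six minutes. A minimal sketch of that calculation plus the max/min spread (the "speed Δ") used in the sections below; both helper names are hypothetical:

```python
def transcription_factor(audio_seconds: float, transcription_seconds: float) -> float:
    """Relative speed: seconds of audio transcribed per second of compute."""
    return audio_seconds / transcription_seconds


def speed_spread(factors: list) -> float:
    """Ratio between the fastest and the slowest run."""
    return max(factors) / min(factors)


# One hour of audio transcribed in six minutes
print(transcription_factor(3600, 360))          # 10.0

# Extremes reported in this article: 55.158 (fastest) and 1.199 (slowest)
print(round(speed_spread([55.158, 1.199]), 1))  # 46.0
```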

But why is there such a huge difference in speed? Let's find out.

Relative Speed Analysis


One episode, many transcriptions

To find out why there is so much variance, we'll look at one specific episode:

Pete Warden — Practical Applications of TinyML.

We transcribed this episode with each of the following Whisper model sizes, both with the original Python implementation on a GPU and with the C++ port (Whisper.cpp) on CPUs:

- 🚤 tiny

- 🛥️ base

- 🚢 large

Speed depends on several factors working together, such as:

- The code we run

- Whether we're using GPUs or CPUs

- The size of the model

We'll dig into those factors in more detail below.

In the next figure, Python on GPU is shown in green 🟢 and C++ on CPUs (Mac M1, 8 cores) in blue 🔵. The GPU we used is an NVIDIA A100 with a maximum power consumption of 400 W, while the CPUs of the M1 Pro have a maximum power consumption of 68.5 W, roughly 83% lower.
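Maximum power draw is only half of the energy picture: energy is power multiplied by time, so the comparison also depends on how long each run takes. A minimal sketch, under the simplifying assumption that each device draws its stated maximum power for the whole run (real draw is usually lower and fluctuates); `energy_wh` is a hypothetical helper:

```python
def energy_wh(power_watts: float, seconds: float) -> float:
    """Energy in watt-hours, assuming a constant power draw for the whole run."""
    return power_watts * seconds / 3600


GPU_MAX_W = 400.0  # NVIDIA A100, maximum power from the text
CPU_MAX_W = 68.5   # M1 Pro, maximum power from the text

# One hour at maximum power
print(energy_wh(GPU_MAX_W, 3600))  # 400.0
print(energy_wh(CPU_MAX_W, 3600))  # 68.5

# Under the constant-power assumption, the CPU run uses less energy only while
# it takes less than this many times as long as the GPU run for the same audio
break_even = GPU_MAX_W / CPU_MAX_W
print(round(break_even, 2))  # 5.84
```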

Pete Warden (2021-10-21)


🚢 Large Models

For large models, the variance in transcription speed is low: the transcription factor ranges from 1.199 to 2.042, a 1.7x speed Δ.


🛥️ Base Models

For base models, the variance in transcription speed is somewhat higher: the transcription factor ranges from 9.953 to 21.445, a 2.15x speed Δ.


🚤 Tiny Models

For tiny models, the variance in transcription speed is very high: the transcription factor ranges from 5.819 to 50, an 8.6x speed Δ.


Model Performance

This parallel coordinates plot shows how the different model sizes perform in terms of transcription time and relative transcription speed.


Model Performance (by model size)

This grouped parallel coordinates plot summarizes model performance: on average, tiny models reach a transcription factor of ~38x, base models ~18x, and large models ~1.6x.
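A grouped plot like this averages `transcription_factor` over all runs that share the same `modelsize`. A minimal sketch of that aggregation in plain Python, assuming the runs have been exported from the W&B runs table as a list of dicts with those two keys; `mean_factor_by_model` is a hypothetical helper and the sample values are made up for illustration, not results from this article:

```python
from collections import defaultdict
from statistics import mean


def mean_factor_by_model(runs: list) -> dict:
    """Average transcription_factor over all runs that share a modelsize."""
    groups = defaultdict(list)
    for run in runs:
        groups[run["modelsize"]].append(run["transcription_factor"])
    return {size: mean(factors) for size, factors in groups.items()}


# Made-up values for illustration, not results from this article
runs = [
    {"modelsize": "tiny", "transcription_factor": 30.0},
    {"modelsize": "tiny", "transcription_factor": 40.0},
    {"modelsize": "base", "transcription_factor": 10.0},
    {"modelsize": "base", "transcription_factor": 20.0},
    {"modelsize": "large", "transcription_factor": 1.0},
    {"modelsize": "large", "transcription_factor": 2.0},
]
print(mean_factor_by_model(runs))  # {'tiny': 35.0, 'base': 15.0, 'large': 1.5}
```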


Access all transcript files

All episodes that have been transcribed can be seen below.

Click any of the transcript text files and wait a moment to see the full transcript of that podcast episode.


gradient-dissent-transcript

Cost Analysis and Conclusion

It's worth noting that transcription services, whether human or machine, are never quite perfect. If you need word-for-word accuracy, we recommend spot-checking the transcripts and correcting them where necessary.

But Whisper's outputs, especially those of the larger models, were really impressive. Better still, they're nearly free:

| Service | Energy Consumption | Cost in Germany in December 2022 |
|---|---|---|
| Human transcription | - | 54 |
| Amazon Transcribe | - | 1.44 |
| Whisper (GPU) | 0.4 kWh | 0.2136 |
| Whisper (M1 CPU) | 0.069 kWh | 0.037 |
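The two Whisper rows follow from energy multiplied by the electricity price: 0.2136 / 0.4 kWh implies a price of about 0.534 per kWh, and the same price applied to 0.069 kWh gives roughly 0.037. A minimal sketch of that arithmetic; the 0.534 price is inferred from the table rather than stated in it, the currency isn't specified, and `electricity_cost` is a hypothetical helper:

```python
def electricity_cost(energy_kwh: float, price_per_kwh: float) -> float:
    """Cost of a job = energy used (kWh) x electricity price per kWh."""
    return energy_kwh * price_per_kwh


# Price implied by the Whisper (GPU) row: 0.2136 / 0.4 kWh
implied_price = 0.2136 / 0.4

print(round(implied_price, 3))                           # 0.534
print(round(electricity_cost(0.069, implied_price), 3))  # 0.037
```

The human and Amazon Transcribe rows have no energy figure in the table, so they aren't derived this way.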

