Can Neural Image Generators Be Detected?

In this article, we take a look at whether images generated by neural networks are distinguishable from real images, and discover the fakes which are the hardest to detect.

Created on March 3 | Last edited on November 23


In recent years, image-generating neural networks have advanced dramatically, to the point where some models produce images that are almost indistinguishable from their real counterparts. In this article, we explore whether images generated by neural networks can be detected, and identify the models whose fake images are the most difficult to detect.

Table of Contents

Are CNN-generated images hard to distinguish from real images?
Performance
Misclassified Predictions
All Predictions

Are CNN-generated images hard to distinguish from real images?

CNNDetection shows that a classifier trained to detect images generated by only one GAN can detect those generated by many other models.

To learn more about the paper and the GANs featured in this report, check out the accompanying 2 Minute Papers video.

Performance

The Premise

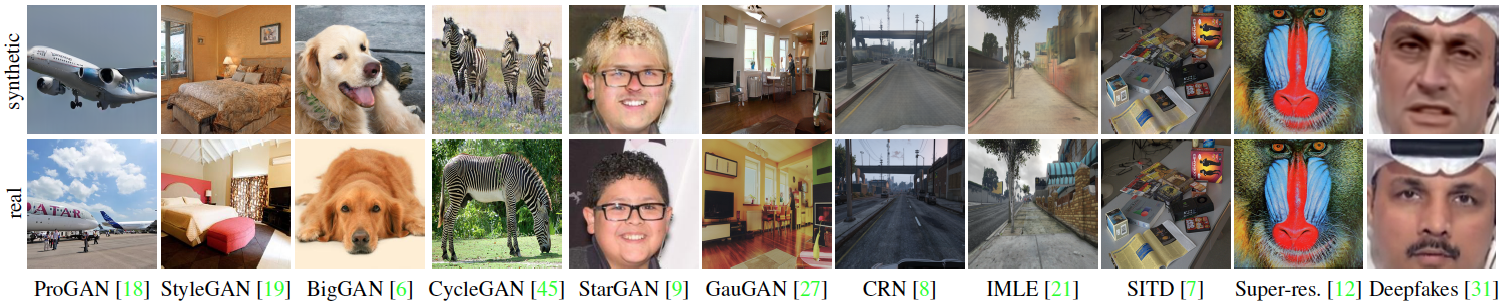

In this paper, the authors ask whether it is possible to create a 'universal' detector for telling apart real images from those generated by a CNN, regardless of the architecture or dataset used. To test this, they collected a dataset of fake images generated by 11 different CNN-based image generator models, chosen to span the space of commonly used architectures today (ProGAN, StyleGAN, BigGAN, CycleGAN, StarGAN, GauGAN, DeepFakes, cascaded refinement networks, implicit maximum likelihood estimation, second-order attention super-resolution, seeing-in-the-dark).

Can the Detector Generalize?

The authors then demonstrate that, with careful pre- and post-processing and data augmentation, a standard image classifier trained on only one specific CNN generator (ProGAN) is able to generalize surprisingly well to unseen architectures, datasets, and training methods (including the just released StyleGAN2).
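The article does not spell out which augmentations are meant here. As a minimal sketch, assume the training images are randomly blurred and randomly re-compressed as JPEG before being fed to the classifier. The function name `augment`, the probabilities, and the blur and quality ranges below are illustrative assumptions, not values taken from this report.

```python
import io
import random

from PIL import Image, ImageFilter


def augment(img, rng, p_blur=0.5, p_jpeg=0.5):
    """Randomly blur and/or JPEG re-compress a PIL image.

    All probabilities and ranges are assumed, illustrative values.
    """
    img = img.convert("RGB")
    if rng.random() < p_blur:
        sigma = rng.uniform(0.0, 3.0)  # assumed blur strength range
        img = img.filter(ImageFilter.GaussianBlur(radius=sigma))
    if rng.random() < p_jpeg:
        quality = rng.randint(30, 100)  # assumed JPEG quality range
        buf = io.BytesIO()
        img.save(buf, format="JPEG", quality=quality)
        buf.seek(0)
        img = Image.open(buf)
        img.load()  # decode now so the in-memory buffer can be discarded
    return img
```

The intuition behind this kind of augmentation is that a detector which has seen blurred and compressed versions of both real and fake images is less likely to latch onto low-level cues tied to one particular generator or file format.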

A likely reason is that all of these generators are built from the same foundational elements: convolutional neural network building blocks.

Their findings suggest the intriguing possibility that today's CNN-generated images share some common systematic flaws, preventing them from achieving realistic image synthesis.

In the sections below, we dive deeper into how the detector, which was trained specifically on ProGAN, generalizes to other GANs. We also look at some of the predictions the detector makes and examples it gets wrong.
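One way to quantify how well the detector generalizes is to score it separately on each generator's test images. The sketch below computes average precision per generator in plain Python. Average precision is one common choice of metric and is an assumption here, as are the helper names `average_precision` and `ap_per_generator` and the `(generator_name, label, score)` record layout.

```python
from collections import defaultdict


def average_precision(labels, scores):
    """AP for binary labels (1 = fake) given detector scores (higher = more fake)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits, precision_sum = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this recall point
    return precision_sum / hits if hits else 0.0


def ap_per_generator(records):
    """Group (generator_name, label, score) records and compute AP per group."""
    grouped = defaultdict(lambda: ([], []))
    for name, label, score in records:
        grouped[name][0].append(label)
        grouped[name][1].append(score)
    return {
        name: average_precision(labels, scores)
        for name, (labels, scores) in grouped.items()
    }
```

Reporting one number per generator, rather than a single pooled score, makes it easy to see which unseen architectures the detector handles well and which it struggles with.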


Misclassified Predictions


All Predictions


