Free for students, educators, and academic researchers. Always.

Trusted by leading universities, top researchers, and over 500,000 ML practitioners

Trusted and cited by hundreds of cutting-edge researchers

Resources for Educators, Teaching Assistants, and Students

W&B for research & education

Automated Logging for Reproducible Research

A Primer on Collaborative Research with DALL-E mini

Sharing Research Findings in W&B Reports

How to Create Publication-Ready Graphics with W&B

An Example of W&B Reports as Classroom Assignments

How to cite Weights & Biases

If you used W&B and we helped make your research successful, we’d love to hear about it. You can cite us with the BibTeX entry below, but what we’d like most is to hear from you about your work. Email us at research@wandb.com and we’ll get in touch.

@misc{wandb,
title = {Experiment Tracking with Weights and Biases},
year = {2020},
note = {Software available from wandb.com},
url = {https://www.wandb.com/},
author = {Biewald, Lukas},
}

Log everything so you lose nothing

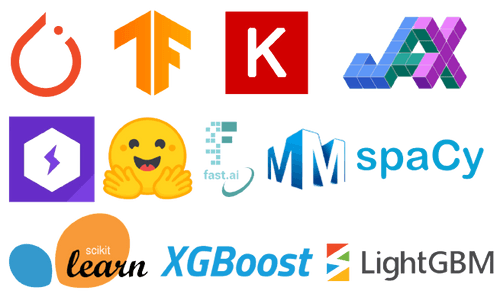

Integrated with every popular framework and thousands of ML repos

Weights & Biases plays well with others. From PyTorch, Keras, and JAX to niche repos across the ML landscape, chances are, you’ll find us integrated there. Check out our most popular integrations (and how they work) in our docs.


Learn from the experts

Collaborate with your team in real-time

The Weights & Biases end-to-end AI developer platform

The Weights & Biases platform helps you streamline your workflow from end to end

Models

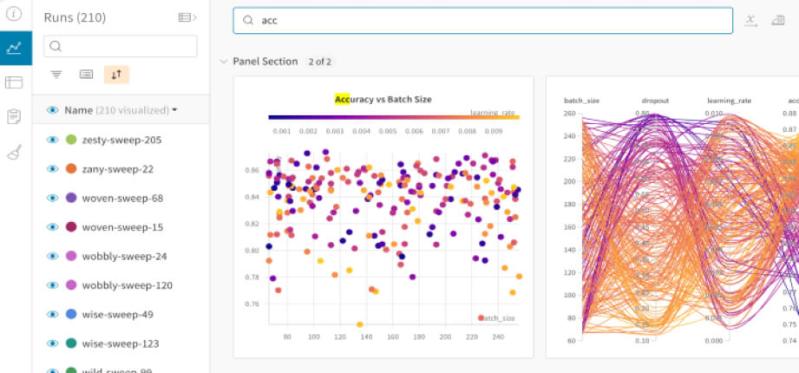

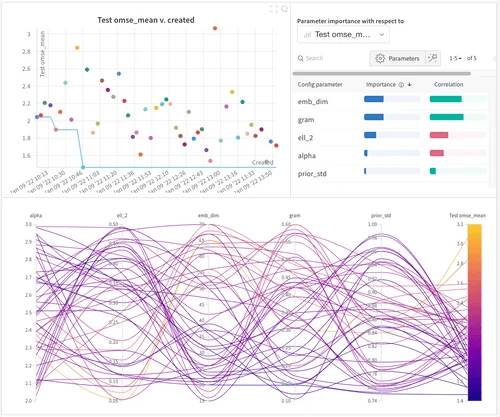

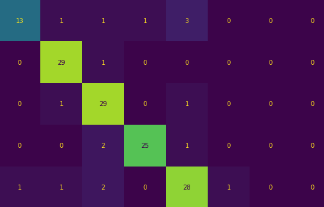

Experiments

Track and visualize your ML experiments

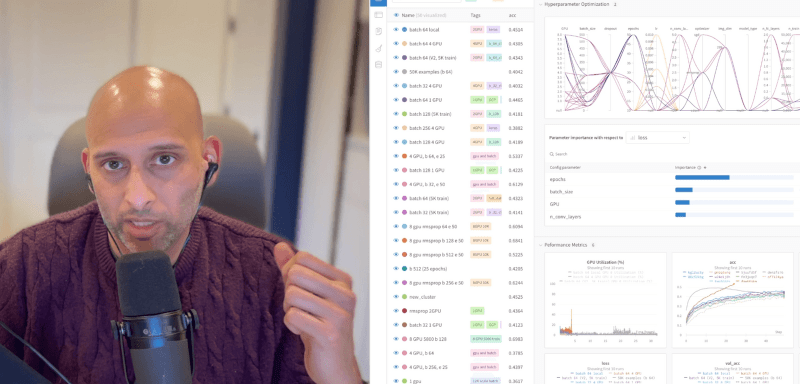

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and debug LLMs

Evaluations

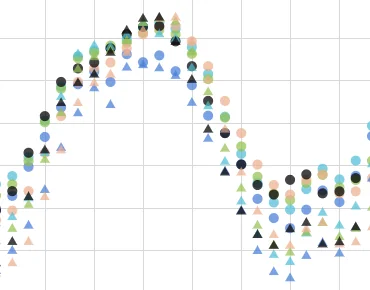

Rigorous evaluations of GenAI applications

Track, compare, and visualize your ML models with 5 lines of code

Add experiment logging to your script with just a few lines of code and start logging results right away. Our lightweight integration works with any Python script.

# Flexible integration for any Python script
import wandb

# 1. Start a W&B run
wandb.init(project='gpt3')

# 2. Save model inputs and hyperparameters
config = wandb.config
config.learning_rate = 0.01

# Model training here

# 3. Log metrics over time to visualize performance
wandb.log({"loss": loss})
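The three steps above leave the training loop as a placeholder, so `loss` is never defined. As a minimal sketch of how the pieces might fit together, the example below fills that gap with a toy gradient-descent loop. The function names `train_toy_model` and `run_with_wandb`, the quadratic objective, and the `target` parameter are all illustrative assumptions and not part of the W&B API; only `wandb.init`, `wandb.log`, `run.config`, and `run.finish` come from the library.

```python
# A minimal sketch: the toy objective and helper names are illustrative
# assumptions, not part of the W&B API.


def train_toy_model(log, steps=20, learning_rate=0.01, target=3.0):
    """Minimize (w - target)^2 by gradient descent, logging the loss each step."""
    w = 0.0
    for step in range(steps):
        loss = (w - target) ** 2           # metric to track over time
        grad = 2.0 * (w - target)          # derivative of the loss w.r.t. w
        w -= learning_rate * grad          # gradient descent update
        log({"loss": loss, "step": step})  # same call shape as wandb.log
    return w


def run_with_wandb():
    """Wire the toy loop into W&B; requires the `wandb` package."""
    import wandb

    # mode="offline" writes the run to local disk instead of syncing it.
    run = wandb.init(project="gpt3", mode="offline",
                     config={"learning_rate": 0.01})
    train_toy_model(wandb.log, learning_rate=run.config["learning_rate"])
    run.finish()
```

Passing the logger in as an argument keeps the loop testable without W&B installed: `train_toy_model(print)` prints each metrics dictionary, which is a quick way to check the loop before calling `run_with_wandb()`.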

# TensorFlow integration (TF 1.x-style session API)
import tensorflow as tf
import wandb

# 1. Start a W&B run
wandb.init(project='gpt3')

# 2. Save model inputs and hyperparameters
config = wandb.config
config.learning_rate = 0.01

# Model training here

# 3. Log metrics over time to visualize performance
with tf.Session() as sess:
    # ...
    wandb.tensorflow.log(tf.summary.merge_all())

# PyTorch integration
import wandb

# 1. Start a new run
wandb.init(project="gpt-3")

# 2. Save model inputs and hyperparameters
config = wandb.config
config.learning_rate = 0.01

# 3. Log gradients and model parameters
wandb.watch(model)
for batch_idx, (data, target) in enumerate(train_loader):
    # Forward pass, loss computation, and backward pass here
    if batch_idx % args.log_interval == 0:
        # 4. Log metrics to visualize performance
        wandb.log({"loss": loss})

# Keras integration
import wandb
from wandb.keras import WandbCallback

# 1. Start a new run
wandb.init(project="gpt-3")

# 2. Save model inputs and hyperparameters
config = wandb.config
config.learning_rate = 0.01

# ... Define a model

# 3. Log layer dimensions and metrics over time
model.fit(X_train, y_train, validation_data=(X_test, y_test),
          callbacks=[WandbCallback()])

# Scikit-learn integration
import wandb

wandb.init(project="visualize-sklearn")

# Model training here

# Log classifier visualizations
wandb.sklearn.plot_classifier(clf, X_train, X_test, y_train,
                              y_test, y_pred, y_probas, labels,
                              model_name='SVC', feature_names=None)

# Log regression visualizations
wandb.sklearn.plot_regressor(reg, X_train, X_test, y_train, y_test,
                             model_name='Ridge')

# Log clustering visualizations
wandb.sklearn.plot_clusterer(kmeans, X_train, cluster_labels,
                             labels=None, model_name='KMeans')

# Hugging Face Transformers integration
# 1. Import wandb and login
import wandb
from transformers import Trainer, TrainingArguments

wandb.login()

# 2. Define which wandb project to log to and name your run
#    (wandb.init takes the run name via `name`)
wandb.init(project="gpt-3", name='gpt-3-base-high-lr')

# 3. Add wandb in your Hugging Face `TrainingArguments`
args = TrainingArguments(... , report_to='wandb')

# 4. W&B logging will begin automatically when you start training your Trainer
trainer = Trainer(... , args=args)
trainer.train()

# XGBoost integration
import wandb
import xgboost

# 1. Start a new run
wandb.init(project="visualize-models", name="xgboost")

# 2. Add the callback
bst = xgboost.train(param, xg_train, num_round, watchlist,
                    callbacks=[wandb.xgboost.wandb_callback()])

# Get predictions
pred = bst.predict(xg_test)