EVALUATIONS

Measure and iterate on AI application and agent performance

Iterate on your AI applications by experimenting with LLMs, prompts, RAG, agents, fine-tuning, and guardrails. Use Weave’s evaluation framework and scoring tools to measure the impact of improvements across multiple dimensions—including accuracy, latency, cost, and user experience. Centrally track evaluation results and lineage for reproducibility, sharing, and rapid iteration.

Flexible evaluation framework

Weave evaluations combine a test dataset with a set of scorers, making them flexible to work with. Weave aggregates the scores for each evaluation, allowing you to compare different evaluations side by side. You can also drill down into individual examples within an evaluation to understand where your prompt or model selection needs improvement.
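
As a rough illustration of the idea above, the sketch below pairs a small dataset with two scorers, aggregates the scores, and then drills into the failing examples. It is plain Python and does not use Weave's API. Every name in it (`toy_model`, `exact_match`, `contains_expected`, `evaluate`) and the sample rows are hypothetical, chosen only to show the shape of the workflow.

```python
from statistics import mean

# Hypothetical test dataset: each row has an input and an expected answer.
dataset = [
    {"question": "What is 2 + 2?", "expected": "4"},
    {"question": "What is the capital of France?", "expected": "Paris"},
    {"question": "What color is the sky?", "expected": "blue"},
]


def toy_model(question: str) -> str:
    """Stand-in for an LLM call; answers from a tiny lookup table."""
    answers = {
        "What is 2 + 2?": "4",
        "What is the capital of France?": "The capital is Paris.",
    }
    return answers.get(question, "I don't know.")


def exact_match(expected: str, output: str) -> float:
    """Scorer: 1.0 only when the output equals the expected answer."""
    return 1.0 if output.strip() == expected else 0.0


def contains_expected(expected: str, output: str) -> float:
    """Scorer: 1.0 when the expected answer appears anywhere in the output."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def evaluate(model, rows, scorers):
    """Run the model on every row, apply every scorer, and average each score."""
    per_example = []
    for row in rows:
        output = model(row["question"])
        scores = {name: fn(row["expected"], output) for name, fn in scorers.items()}
        per_example.append(
            {"question": row["question"], "output": output, "scores": scores}
        )
    summary = {name: mean(r["scores"][name] for r in per_example) for name in scorers}
    return summary, per_example


summary, per_example = evaluate(
    toy_model,
    dataset,
    {"exact_match": exact_match, "contains_expected": contains_expected},
)

# Drill down: which examples failed the more lenient scorer?
failures = [
    r["question"] for r in per_example if r["scores"]["contains_expected"] == 0.0
]
print(summary)
print(failures)
```

Keeping the per-example records next to the aggregate is what makes the drill-down possible: the summary shows that something regressed, and the individual rows show where.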

AI system of record

Centrally track all evaluation data to enable reproducibility, collaboration, and governance. Trace lineage back to LLMs used in your application to make continuous improvements. Weave automatically versions your code, datasets, and scorers by tracking changes between experiments, enabling you to pinpoint performance drivers across evaluations.

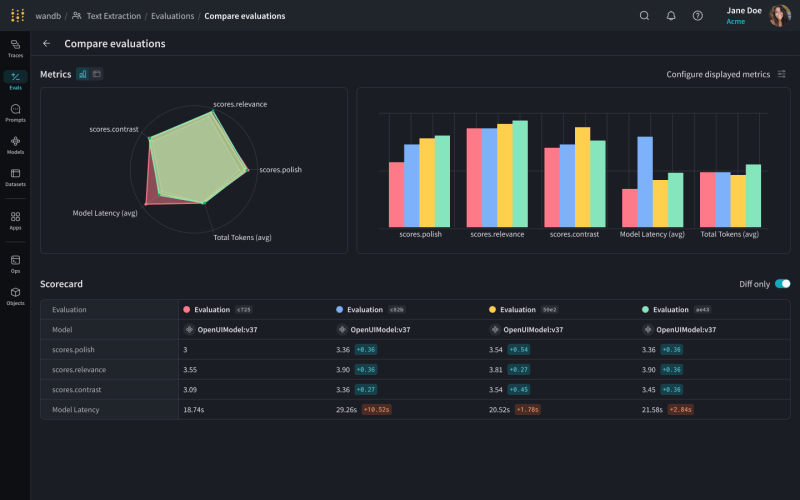

Powerful visual comparisons

Use visualizations to compare evaluations objectively and precisely.

Pre-built scorers or bring your own

Weave ships with a set of industry-standard scorers out of the box, and makes it easy to define your own.

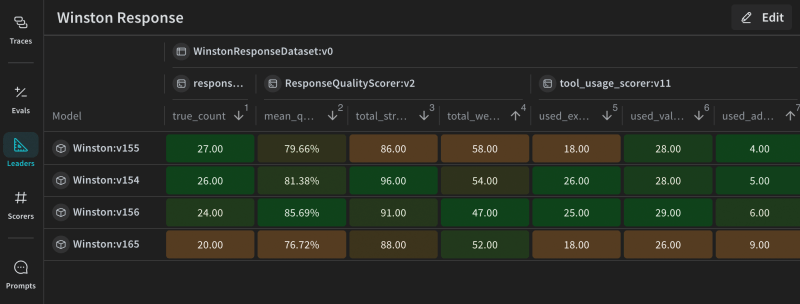

Leaderboards

Aggregate evaluations into leaderboards featuring the best performers and share them across your organization.
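
Conceptually, a leaderboard is a ranking over aggregated evaluation results. The sketch below shows one way that ranking could work, in plain Python rather than Weave's leaderboard feature. The evaluation names, the metric values, and the tie-breaking rule (higher accuracy first, then lower latency) are all made-up assumptions for illustration.

```python
# Hypothetical aggregated results, one row per evaluation run.
# These numbers are invented for the example and are not measurements.
results = [
    {"evaluation": "prompt-v1", "accuracy": 0.72, "avg_latency_s": 1.9},
    {"evaluation": "prompt-v2", "accuracy": 0.81, "avg_latency_s": 2.4},
    {"evaluation": "prompt-v3", "accuracy": 0.81, "avg_latency_s": 1.6},
]


def build_leaderboard(rows):
    """Rank by accuracy (descending), breaking ties by latency (ascending)."""
    return sorted(rows, key=lambda r: (-r["accuracy"], r["avg_latency_s"]))


leaderboard = build_leaderboard(results)
for rank, row in enumerate(leaderboard, start=1):
    print(
        f"{rank}. {row['evaluation']}: "
        f"accuracy={row['accuracy']:.2f}, latency={row['avg_latency_s']:.1f}s"
    )
```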

Online evaluations

Run evaluations on live incoming production traces, which is useful when you don’t have a curated evaluation dataset.
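
Without a curated dataset there is no expected answer to compare against, so online scoring usually relies on reference-free checks. The following is a minimal sketch of that pattern in plain Python, not Weave's API. The trace shape, the two checks (`within_length_budget`, `no_refusal`), and the sampling parameter are hypothetical.

```python
import random


def within_length_budget(output: str, max_chars: int = 200) -> bool:
    """Reference-free check: the response stays under a length budget."""
    return len(output) <= max_chars


def no_refusal(output: str) -> bool:
    """Reference-free check: the response does not open with a refusal phrase."""
    lowered = output.strip().lower()
    return not lowered.startswith(("i'm sorry", "i cannot", "i can't"))


def score_trace(trace: dict) -> dict:
    """Apply every check to one production trace."""
    output = trace["output"]
    return {
        "within_length_budget": within_length_budget(output),
        "no_refusal": no_refusal(output),
    }


def monitor(traces, sample_rate: float, rng: random.Random):
    """Score a random sample of incoming traces and yield the results."""
    for trace in traces:
        if rng.random() < sample_rate:
            yield {"id": trace["id"], "scores": score_trace(trace)}


# Hypothetical production traces (normally streamed from a live application).
traces = [
    {"id": "t1", "input": "Summarize the report.", "output": "The report covers Q3 revenue."},
    {"id": "t2", "input": "Draft a reply.", "output": "I'm sorry, I can't help with that."},
    {"id": "t3", "input": "Explain the outage.", "output": "x" * 500},
]

# sample_rate=1.0 scores every trace; lower it to control scoring cost.
scored = list(monitor(traces, sample_rate=1.0, rng=random.Random(0)))
flagged = [s["id"] for s in scored if not all(s["scores"].values())]
print(flagged)
```

Sampling is a design choice rather than a requirement: scoring every trace gives full coverage, while a lower rate trades coverage for cost when the checks themselves call an LLM.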

Get started with AI evaluations

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications