GUARDRAILS

Block prompt attacks and harmful outputs to safeguard your users and brand

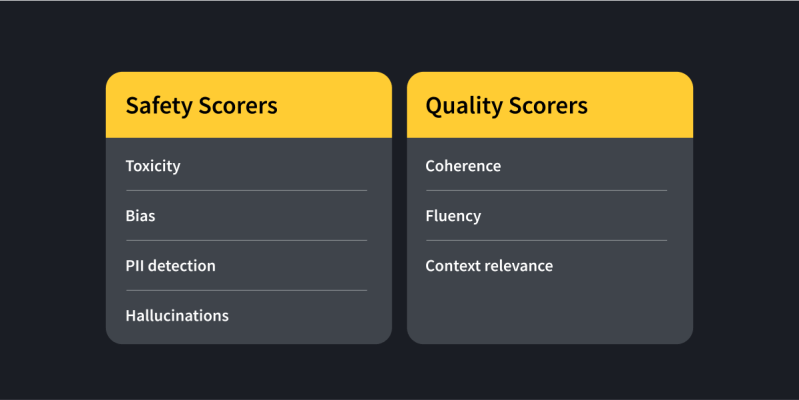

Weave Guardrails offers pre-built scorers for safety and quality to support responsible AI. Safety scorers cover toxicity, bias, PII detection, and hallucinations; quality scorers cover coherence, fluency, and context relevance.

You can run scorers on any LLM call. On user inputs, they detect and mitigate malicious activity such as prompt injection. On AI outputs, they flag hallucinations or inappropriate content so it can be blocked before it reaches users. Because every input and output is logged in Weave, you can review scorer performance over time to improve application quality.

Scorers

Scorers evaluate the inputs and outputs of AI agents and applications to measure safety, quality, or any other metric you choose. Weave provides out-of-the-box, API-based scorers for safety and quality, built by Weights & Biases experts, along with specialized versions for RAG applications. Alternatively, you can integrate with your favorite guardrails, such as NeMo Guardrails and Amazon Bedrock Guardrails, or build your own.
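As a rough illustration of what "build your own" can mean, the plain-Python sketch below defines a toy scorer that flags text containing blocked terms and returns a pass/fail flag with a numeric score. `ScoreResult`, `BlocklistScorer`, and the example blocklist are hypothetical names chosen for this sketch. They are not part of Weave's API, and a keyword blocklist is far cruder than the pre-built scorers described above.

```python
from dataclasses import dataclass, field


@dataclass
class ScoreResult:
    """Hypothetical result type (not Weave's): pass/fail flag plus a score."""
    passed: bool
    score: float
    reason: str = ""


@dataclass
class BlocklistScorer:
    """Toy custom scorer (hypothetical, not part of Weave's API).

    The score is the fraction of blocked terms found in the text;
    the check passes only when none of them appear.
    """
    blocked_terms: list[str] = field(default_factory=list)

    def score(self, text: str) -> ScoreResult:
        lowered = text.lower()
        hits = [t for t in self.blocked_terms if t.lower() in lowered]
        ratio = len(hits) / len(self.blocked_terms) if self.blocked_terms else 0.0
        return ScoreResult(
            passed=not hits,
            score=ratio,
            reason=f"matched: {', '.join(hits)}" if hits else "",
        )


scorer = BlocklistScorer(blocked_terms=["password", "ssn"])
print(scorer.score("Here is the weather forecast."))  # passes, score 0.0
print(scorer.score("My SSN is on file."))             # fails, score 0.5
```

Returning a structured result rather than a bare boolean keeps the reason for a failure available for later review, which matters when scorer results are logged and inspected over time.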

Hallucination prevention

Because LLMs are non-deterministic, their outputs cannot be fully predicted, so you need a way to modify them when harmful, inappropriate, or off-brand content is detected. Weave lets you adjust application behavior in real time to mitigate the impact of hallucinations.

Programmable safeguards

When Weave detects unsafe conditions or irrelevant responses, it automatically routes your agent or AI application to an alternative flow that runs your custom exception code, helping keep your users and your brand safe.
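The routing idea can be sketched generically: check the input, call the model, check the output, and hand off to a fallback whenever a check fails. Every name here is a hypothetical stand-in (`guarded_call`, `fake_llm`, the naive `no_injection` heuristic, and `safe_reply` playing the role of custom exception code). The sketch shows the control flow only and is not how Weave implements routing.

```python
from typing import Callable

# Hypothetical type: a check returns True when the text is acceptable.
Check = Callable[[str], bool]


def guarded_call(
    llm: Callable[[str], str],
    prompt: str,
    input_checks: list[Check],
    output_checks: list[Check],
    fallback: Callable[[str, str], str],
) -> str:
    """Hypothetical helper (not Weave's API) that guards one model call.

    If an input check fails, the model is never called. If an output
    check fails, the model's answer is discarded. In both cases the
    fallback receives the stage ("input" or "output") and the text.
    """
    if not all(check(prompt) for check in input_checks):
        return fallback("input", prompt)
    output = llm(prompt)
    if not all(check(output) for check in output_checks):
        return fallback("output", output)
    return output


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"Echo: {prompt}"


def no_injection(text: str) -> bool:
    # Naive prompt-injection heuristic, for illustration only.
    return "ignore previous instructions" not in text.lower()


def short_enough(text: str) -> bool:
    # Toy output check: reject overly long responses.
    return len(text) <= 50


def safe_reply(stage: str, text: str) -> str:
    # Plays the role of the "custom exception code" in the alternative flow.
    return f"Sorry, I can't help with that ({stage} blocked)."


print(guarded_call(fake_llm, "Hi there", [no_injection], [short_enough], safe_reply))
# prints "Echo: Hi there"
```

Checking the input before the model call means a blocked prompt never costs a model invocation, while the output check catches problems the input check cannot foresee.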

Data privacy protection

Malicious actors may try to extract data from your AI application or confuse your agents. Weave intercepts prompt attacks and filters LLM outputs to prevent PII leakage.
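Filtering outputs for PII can start with pattern-based redaction applied before a response reaches the user. The sketch below assumes three illustrative regular expressions (email address, US-style phone number, US Social Security number). `redact_pii` and `PII_PATTERNS` are hypothetical names, not Weave functions, and production PII detection needs much broader coverage than a few regexes.

```python
import re

# Illustrative patterns only (assumption): real PII detection must also
# handle names, addresses, international phone formats, and more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Hypothetical helper (not Weave's API).

    Replaces each match with a [LABEL] placeholder and returns the
    redacted text plus the labels of the PII types that were found.
    """
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


safe, labels = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
print(safe)    # Contact [EMAIL] or [PHONE].
print(labels)  # ['EMAIL', 'PHONE']
```

Returning the detected labels alongside the redacted text lets the application log that a leak was attempted, not just silently scrub it.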
