INFERENCE

Instantly access top open-source LLMs and serve fine-tuned models

W&B Inference, powered by CoreWeave, provides API and playground access to leading open-source LLMs, so you can develop AI applications and agents without signing up for a hosting provider or deploying models yourself. You can also bring your own trained Low-Rank Adaptation (LoRA) weights to run serverless inference with fine-tuned models.

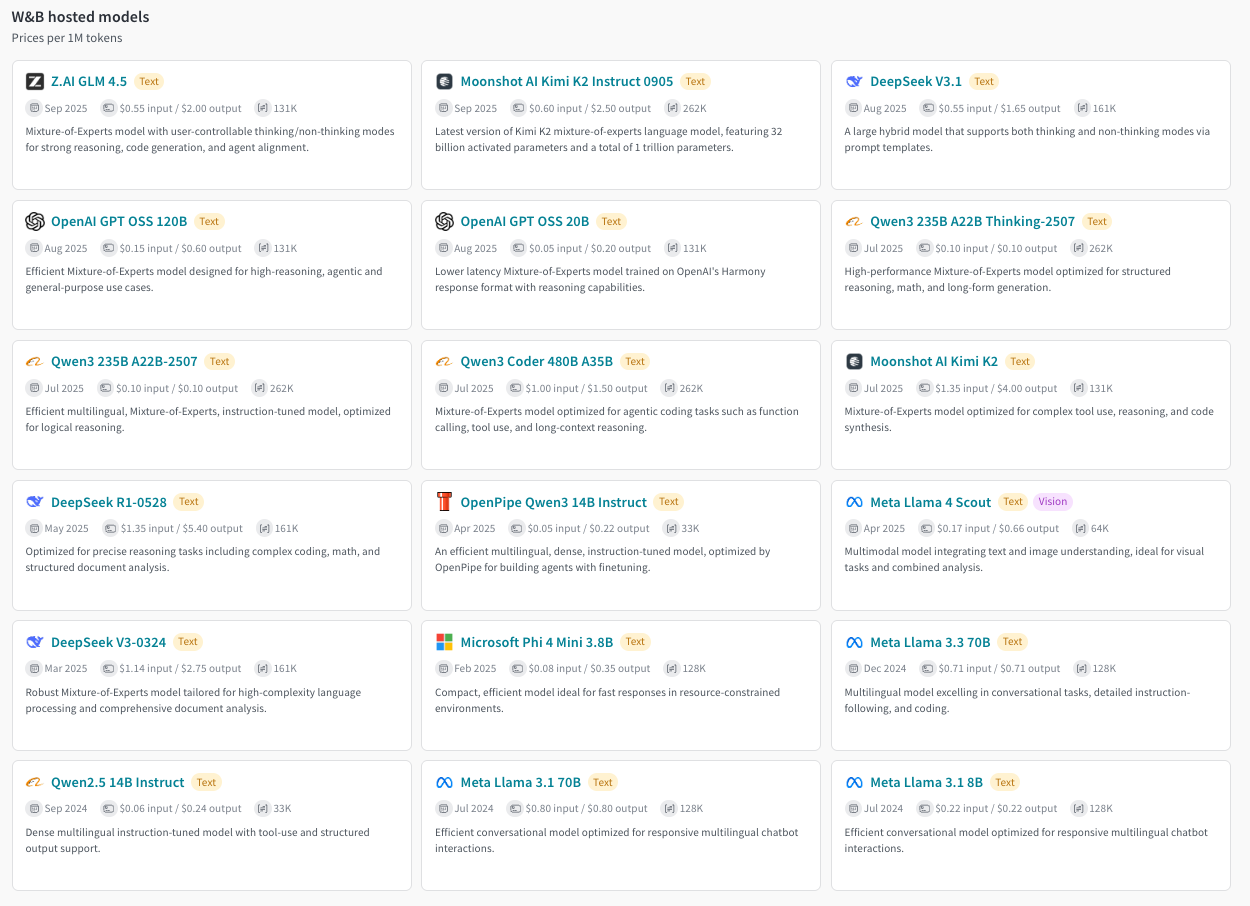

Available models

- Z.AI GLM 4.5 (Text)
- Moonshot AI Kimi K2 Instruct 0905 (Text)
- DeepSeek V3.1 (Text)
- OpenAI GPT OSS 20B (Text)
- OpenAI GPT OSS 120B (Text)
- Qwen3 30B A3B (Text)
- Qwen3 235B A22B-2507 (Text)
- Qwen3 Coder 480B A35B (Text)
- Qwen3 235B A22B Thinking-2507 (Text)
- Moonshot AI Kimi K2 (Text)
- DeepSeek R1-0528 (Text)
- OpenPipe Qwen3 14B Instruct (Text)
- Meta Llama 4 Scout (Text, Vision)
- DeepSeek V3-0324 (Text)
- Microsoft Phi 4 Mini 3.8B (Text)
- Meta Llama 3.3 70B (Text)
- Qwen 2.5 14B Instruct (Text)

```python
import openai
import weave

# Weave autopatches OpenAI to log calls to Weave
weave.init("<team>/<project>")

client = openai.OpenAI(
    # The custom base URL points to Inference
    base_url="https://api.inference.wandb.ai/v1",
    # Get your API key from https://wandb.ai/authorize
    # Consider setting it in the environment as OPENAI_API_KEY instead for safety
    api_key="<your-apikey>",
    # Team and project are required for usage tracking
    project="<team>/<project>",
)

response = client.chat.completions.create(
    model="moonshotai/Kimi-K2-Instruct",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Tell me a joke."},
    ],
)

print(response.choices[0].message.content)
```
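The comment in the example above recommends keeping the API key in the environment rather than in source code. A minimal sketch of that, assuming you export your W&B API key as `OPENAI_API_KEY` and your team and project as `WANDB_ENTITY` and `WANDB_PROJECT`; those last two names (borrowed from the wandb SDK) and the helper `inference_client_kwargs` are choices made for this illustration, not something W&B Inference requires.

```python
import os
from typing import Dict, Mapping

INFERENCE_BASE_URL = "https://api.inference.wandb.ai/v1"


def inference_client_kwargs(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Build openai.OpenAI(...) keyword arguments from environment variables.

    Hypothetical helper: OPENAI_API_KEY holds your W&B API key; WANDB_ENTITY
    and WANDB_PROJECT (team and project) are a convention chosen for this sketch.
    """
    required = ("OPENAI_API_KEY", "WANDB_ENTITY", "WANDB_PROJECT")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {
        "base_url": INFERENCE_BASE_URL,
        "api_key": env["OPENAI_API_KEY"],
        # Team and project are required for usage tracking
        "project": f"{env['WANDB_ENTITY']}/{env['WANDB_PROJECT']}",
    }
```

Pass the result straight to the client, for example `openai.OpenAI(**inference_client_kwargs())`. The key then never appears in the script, so the file can be shared without exposing it.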

Quickly explore and switch between new models

New models with better performance and pricing pop up all the time, but each new model means another provider, another account, and another API key to deal with.

W&B Inference, powered by CoreWeave, hosts popular open-source models on powerful CoreWeave infrastructure that you can access with your existing Weights & Biases account through the SDK or the UI. Test and switch between models quickly without signing up for additional API keys or hosting models yourself.
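Because every hosted model sits behind the same OpenAI-compatible endpoint, switching models comes down to changing the `model` argument. A minimal sketch, assuming a client configured as in the Kimi K2 example above; `ask_each_model` is a hypothetical helper, not part of the W&B or OpenAI SDKs.

```python
from typing import Any, Dict, Iterable


def ask_each_model(client: Any, model_ids: Iterable[str], prompt: str) -> Dict[str, str]:
    """Send the same prompt to several models through one OpenAI-compatible client.

    Only the `model` argument changes between calls; the base URL, API key,
    and project configured on the client stay the same.
    """
    replies: Dict[str, str] = {}
    for model_id in model_ids:
        response = client.chat.completions.create(
            model=model_id,
            messages=[{"role": "user", "content": prompt}],
        )
        # Keep only the text of the first choice for a side-by-side comparison
        replies[model_id] = response.choices[0].message.content
    return replies
```

For example, `ask_each_model(client, ["moonshotai/Kimi-K2-Instruct"], "Tell me a joke.")` returns a dictionary keyed by model ID; add further model IDs from the W&B Inference documentation to compare several models on the same prompt.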

Access models in the playground with zero configuration

Explore open-source models instantly in the playground. No model endpoints or access keys required.

Skip the hassle of configuring model endpoints and custom providers. Your Weights & Biases account gives you instant access to a wide selection of powerful open-source foundation models, fully hosted on our infrastructure. Zero configuration needed.

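```python
from openai import OpenAI

# Replace with your W&B team and project
WB_TEAM = "<team>"
WB_PROJECT = "<project>"
# Get your API key from https://wandb.ai/authorize
API_KEY = "<your-apikey>"

# Reference your LoRA weights by their W&B artifact path and alias
model_name = f"wandb-artifact:///{WB_TEAM}/{WB_PROJECT}/qwen_lora:latest"

client = OpenAI(
    base_url="https://api.inference.wandb.ai/v1",
    api_key=API_KEY,
    # Team and project are required for usage tracking
    project=f"{WB_TEAM}/{WB_PROJECT}",
)

resp = client.chat.completions.create(
    model=model_name,
    messages=[{"role": "user", "content": "Say 'Hello World!'"}],
)

print(resp.choices[0].message.content)
```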

Serverless LoRA inference

The evolving continuous learning paradigm for iterating on agents requires AI engineers to switch frequently between training and inference. In practice, that means building a complex pipeline to fetch the latest weights, hot-swap them for inference, and resume training.

With W&B Inference, we handle that complexity for you. Bring your own LoRA weights to serve fine-tuned models without setting up and scaling serving infrastructure for every LoRA iteration.
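The LoRA example above addresses fine-tuned weights with a `wandb-artifact:///<team>/<project>/<artifact>:<alias>` model string. A small sketch of building that string for each training iteration; the helper `lora_model_name` is hypothetical, and passing a pinned version such as `v3` instead of `latest` is our assumption based on how W&B artifact aliases generally work.

```python
def lora_model_name(team: str, project: str, artifact: str, version: str = "latest") -> str:
    """Return the model string for a LoRA artifact stored in W&B.

    Hypothetical helper following the wandb-artifact:/// pattern from the
    LoRA example; pinned versions like "v3" are an assumption.
    """
    parts = {"team": team, "project": project, "artifact": artifact, "version": version}
    for label, value in parts.items():
        # Empty parts or embedded separators would produce an ambiguous reference
        if not value or "/" in value or ":" in value:
            raise ValueError(f"invalid {label}: {value!r}")
    return f"wandb-artifact:///{team}/{project}/{artifact}:{version}"
```

Requesting `latest` is convenient while you iterate, since each newly logged LoRA version becomes the target without code changes (assuming the `latest` alias moves to the newest version, as it does for W&B artifacts). A pinned version keeps evaluations reproducible.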


Easily iterate on AI applications that use open-source models

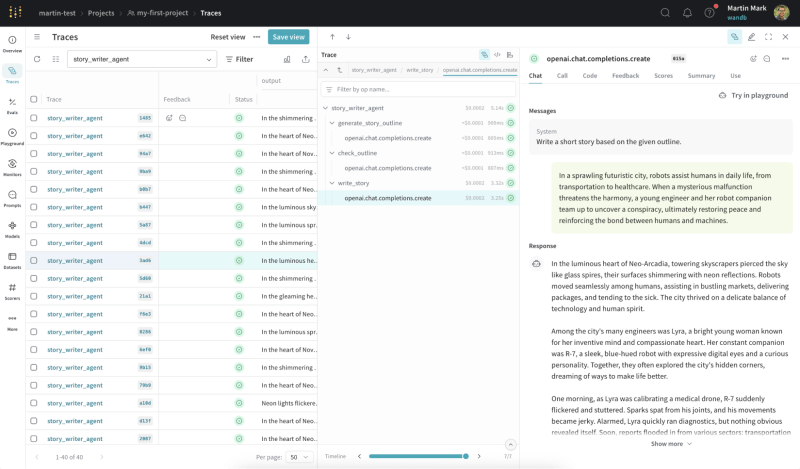

LLM-powered apps need observability tools, but open-source model hosting providers don’t offer them, forcing developers to juggle disconnected platforms for hosting and observability.

W&B Inference runs directly on CoreWeave infrastructure with observability built in through W&B Weave, so you can evaluate, monitor, and iterate on AI applications and agents without extra instrumentation, fragmented workflows, or added complexity.

Get started for free

Experimentation can quickly get expensive when every new model you test comes with a separate price plan.

We host the latest models, ready for inference within your existing Weights & Biases subscription. A single plan keeps costs low and billing simple, with no need to manage multiple providers.

See our pricing page for more information.

The Weights & Biases end-to-end AI developer platform


The Weights & Biases platform helps you streamline your workflow from end to end.

Models

- Experiments: Track and visualize your ML experiments
- Sweeps: Optimize your hyperparameters
- Registry: Publish and share your ML models and datasets
- Automations: Trigger workflows automatically

Weave

- Traces: Explore and debug LLMs
- Evaluations: Rigorous evaluations of GenAI applications