AGENTS

Observability tools for building autonomous AI systems that perform multi-step tasks

W&B Weave provides the tools you need to evaluate, monitor, and iterate on agentic AI systems during both development and production. With traces, scorers, guardrails, and a registry, you can confidently build and manage safe, high-quality agents and agentic workflows.

Evaluations

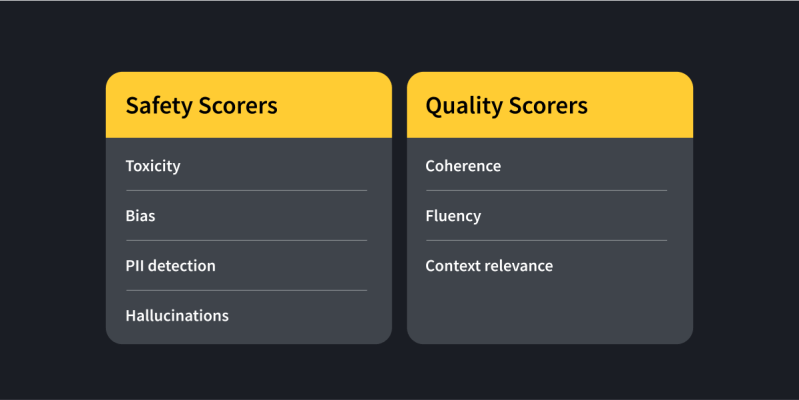

Agentic systems are complex and involve many different components, which makes evaluation metrics harder to build and track. Weave lets you evaluate agents quickly with pre-built, third-party, or homegrown scorers, so you can iterate faster.

Debugger

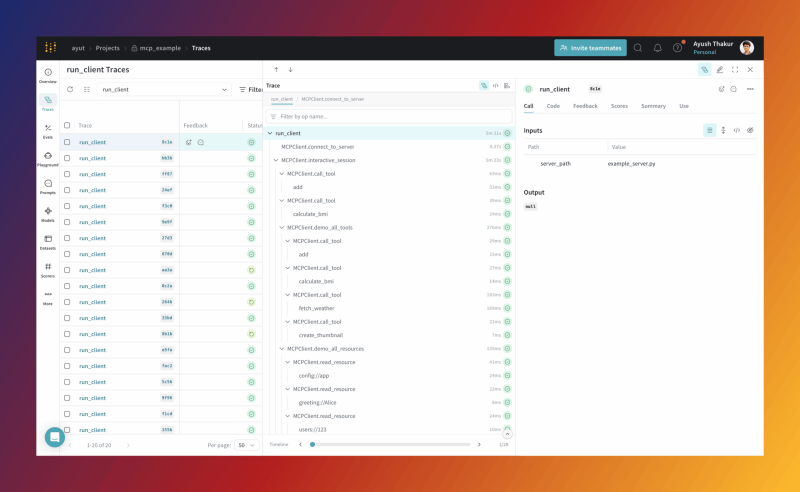

Agents organize their tasks into sequences of steps, each performing a variety of actions such as calling tools, reflecting on outputs, and retrieving relevant data. Debugging and iterating on these complex rollouts can be challenging with traditional call-stack views. Weave visualizes each step of an agent rollout clearly, so you can iterate faster.

Guardrails

Because LLMs are non-deterministic, you need the ability to modify agent inputs and outputs when harmful, inappropriate, or off-brand content is detected. Weave lets you adjust agent behavior in real time to mitigate the impact of hallucinations and prompt attacks.

Integrations

New agent frameworks are coming to market rapidly, making it difficult to keep up. Through integrations with popular frameworks such as CrewAI and the OpenAI Agents SDK, Weave helps you future-proof your work, ensuring your agents won't become obsolete as new frameworks emerge.

Agent protocols

Tracing interactions between agents and the tools they use can be messy. Weave cuts through the chaos by auto-logging Model Context Protocol (MCP) agent traces with one line of code. Coming soon: plug-and-play tracing support for Google's Agent2Agent (A2A) protocol, so you can spend less time instrumenting and more time building.

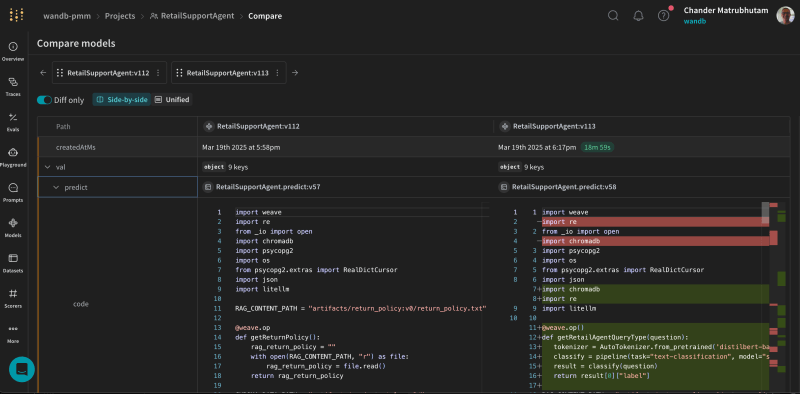

Governance

For compliance and audits, developers need to be able to rebuild specific agent versions and configurations and to reproduce events that arise in production. Weights & Biases acts as a system of record, letting AI developers reproduce any task in the agent lifecycle through code, dataset, and metadata versioning and lineage tracking.

Explore Weights & Biases


The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications