SERVERLESS RL

Run RL fine-tuning jobs without worrying about GPUs and infrastructure

Serverless RL lets you post-train LLMs for multi-turn agentic tasks to improve reliability and speed and to reduce cost, all without provisioning or managing infrastructure. You keep control over the key parts of the reinforcement learning (RL) loop, including examples, environment, rewards, and hyperparameters.

We handle the infrastructure for you

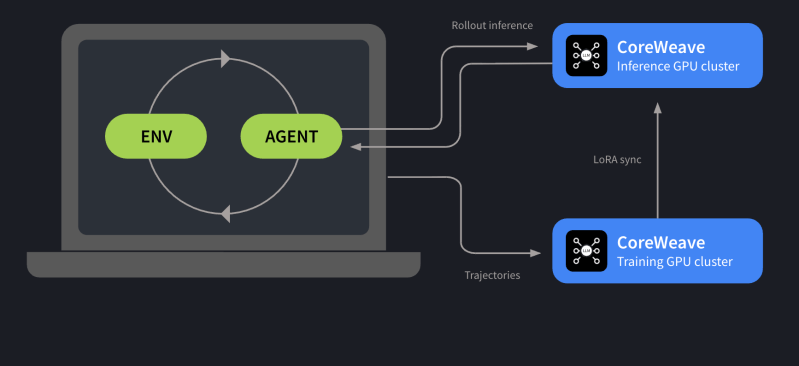

We run the GPUs, memory, and other infrastructure on a managed, elastic CoreWeave cluster that scales up to dozens of GPUs or down to zero. By separating inference from training and orchestrating distributed training across multiple runs, we maximize GPU utilization, cut costs, and reduce training time.

Ready access to GPUs with autoscaling

Securing GPU capacity usually takes weeks of reservations and planning, and forgetting to shut GPUs down when you're done wastes budget. With Serverless RL, there is no wait: you get instant access to powerful CoreWeave GPUs. The service scales elastically with your training, up when needed and down to zero when not, so you avoid idle spend and the "left it on" headache.

Zero infrastructure headaches

Ever leave a training job running overnight and come back to a CUDA "out of memory" or some other runtime error? It happens more than we'd like to admit. With Serverless RL, we fully manage the infrastructure and keep it healthy, so jobs stay resilient and you can focus on training instead of babysitting GPU clusters.

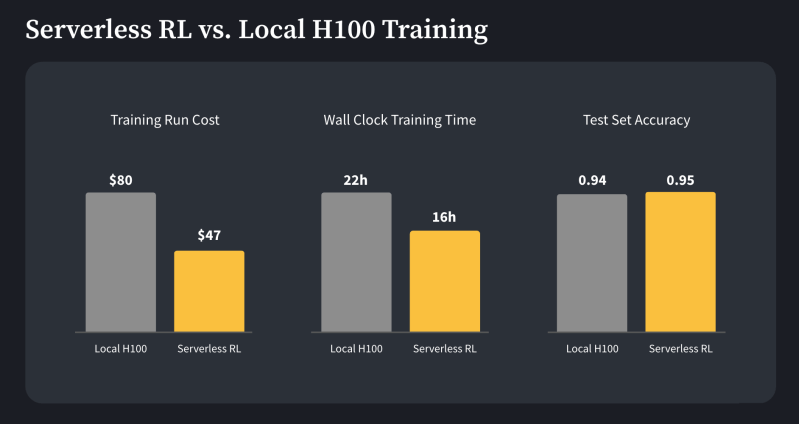

~1.4× faster training at up to 40% lower cost than self-managed infrastructure

In RL training, GPUs sit idle while waiting for rollouts to complete, which inflates cost. W&B Training's Serverless RL backend on CoreWeave packs jobs to maximize utilization. This cuts costs by up to 40% and speeds up training by about 1.4× with no loss in quality. Rollouts run on a shared GPU cluster with per-token billing. You also skip evaluating GPU providers and writing infrastructure scripts: start your RL run in minutes with just a Weights & Biases account and API key.

Faster feedback loop

RL training isn't fire-and-forget; it's iterative: run the agent, debug, tune tools, retrain, repeat. On local infrastructure this loop is painful, because each restart reinitializes training and inference, taking minutes to spin up and load the model into GPU memory. With Serverless RL, training and inference run on separate always-on CoreWeave instances, so changes to your rollout or training loop take effect in seconds, not minutes.