Train large-scale models and craft the perfect prompts with Weights & Biases

Trusted by the teams building the largest models

Research Engineer – Facebook AI Research

Co-Founder – OpenAI

CEO and Co-Founder – Stability AI

Train concurrently and collaborate in real-time

Dataset and model versioning

Avoid wasting resources

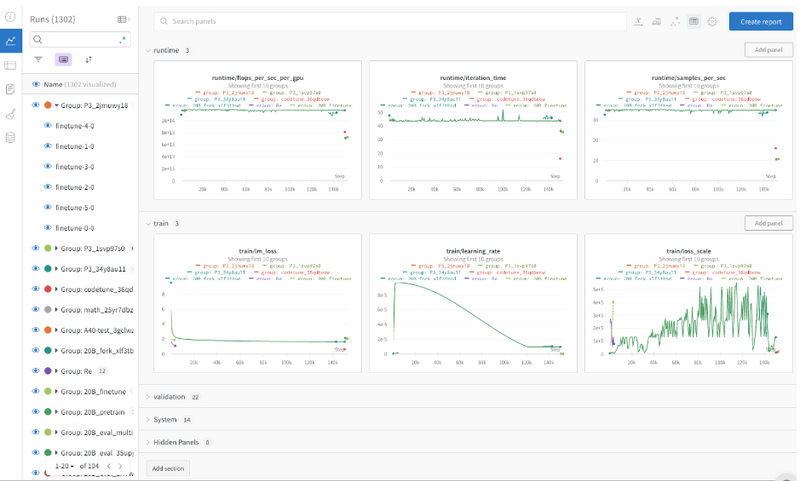

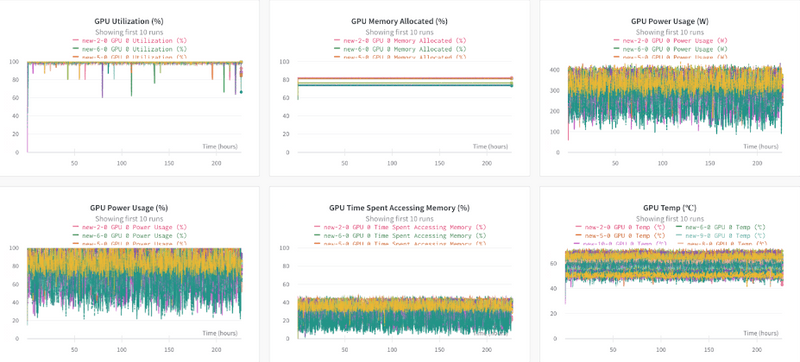

Easily spot failures and waste with Weights & Biases’ real-time monitoring of model and system metrics. Analyze edge cases, highlight regressions, and prune hyperparameters to get the best results with the fewest resources.

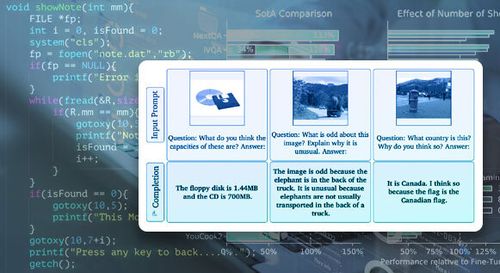

Iterative prompt development

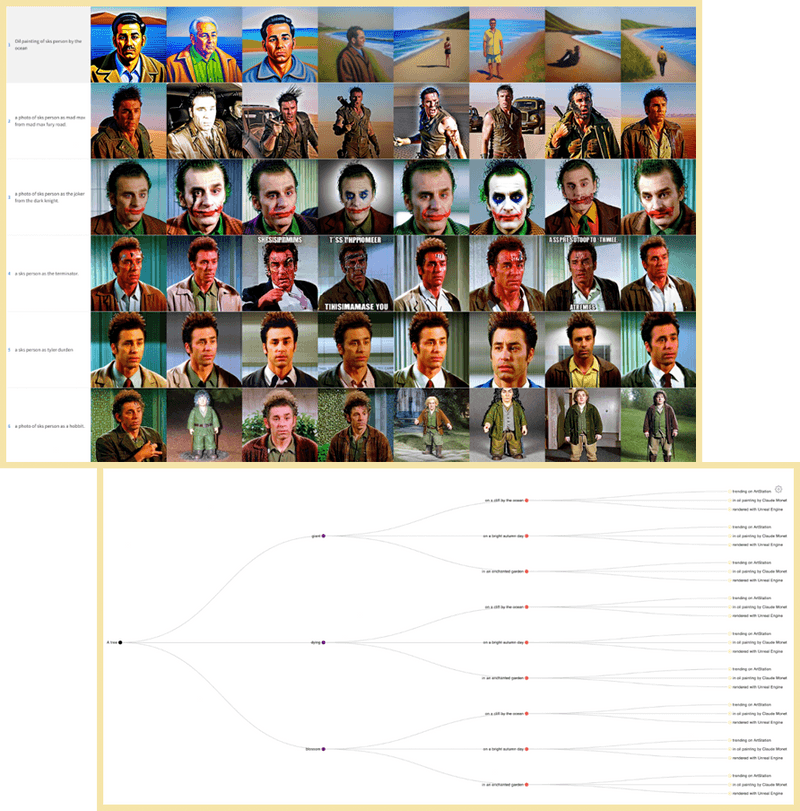

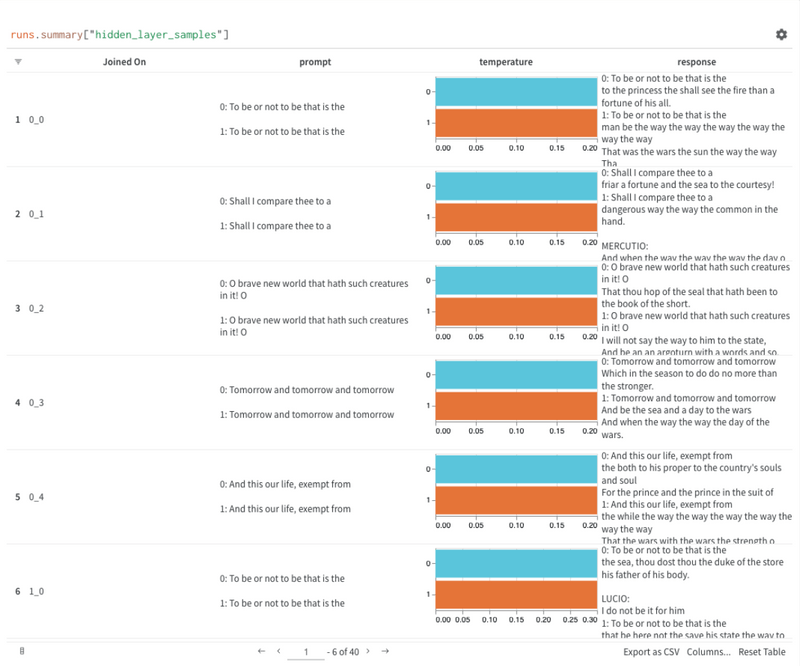

Weights & Biases supports prompt engineering for zero-shot or few-shot tasks by organizing experiments, providing visual and interactive analysis tools, and keeping track of work across chained prompts. It makes exploring a model’s latent space for functional prompts more efficient.

Large-scale dataset exploration

Weights & Biases enables dynamic exploration and optimization of large-scale model data, predictions, and outputs. It helps you debug datasets and models, improve them continuously, and share results with your organization.

See Weights & Biases in action

Processing Data for LLMs

Evaluating LLMs

DeepMind Flamingo

Unconditional Image Generation

The Weights & Biases end-to-end AI developer platform

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications