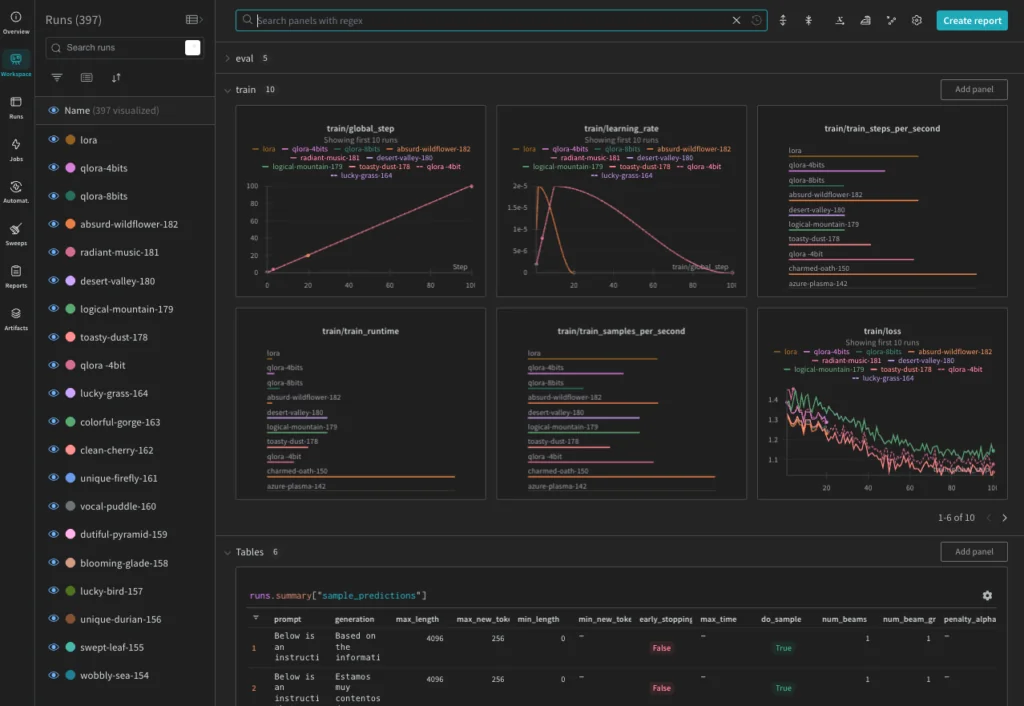

Fine-Tuning on Weights & Biases

Join the world-class AI teams training and fine-tuning large-scale models on Weights & Biases, and build your best AI.

The world’s leading ML teams trust W&B

Weights & Biases works with every fine-tuning framework and fine-tuning provider, whether you're fine-tuning LLMs, diffusion models, or even multimodal models:

The Hugging Face transformers and TRL libraries ship with a W&B integration, so turning on experiment tracking takes only a few lines.

See our Transformers documentation for how to get started.

Code:

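import os

import wandb

from transformers import Trainer, TrainingArguments

from trl import SFTTrainer  # only needed if using TRL

# 1. Define which wandb project to log to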

wandb.init(project="llama-4-fine-tune")

# 2. turn on model checkpointing

os.environ["WANDB_LOG_MODEL"] = "checkpoint"

# 3. Add "wandb" in your `TrainingArguments`

args = TrainingArguments(..., report_to="wandb")

# 4. W&B logging will begin automatically when you start training your Trainer

trainer = Trainer(..., args=args)

# OR, if using TRL, W&B logging will begin automatically when you start training your SFTTrainer

trainer = SFTTrainer(..., args=args)

# Start training

trainer.train()
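
The project and checkpoint settings from steps 1 and 2 can also be supplied entirely through environment variables, which the transformers W&B callback reads when training starts. The sketch below is a minimal illustration, assuming the `WANDB_PROJECT` and `WANDB_LOG_MODEL` variable names from the transformers integration; the helper name `configure_wandb_env` is hypothetical and not part of either library.

```python
import os

# Values the transformers integration accepts for WANDB_LOG_MODEL (assumption
# based on its documentation): log every checkpoint, only the final model, or nothing.
_LOG_MODEL_CHOICES = {"checkpoint", "end", "false"}


def configure_wandb_env(project: str, log_model: str = "checkpoint") -> dict:
    """Hypothetical helper: set W&B environment variables and return what was set."""
    if log_model not in _LOG_MODEL_CHOICES:
        raise ValueError(f"log_model must be one of {sorted(_LOG_MODEL_CHOICES)}")
    settings = {"WANDB_PROJECT": project, "WANDB_LOG_MODEL": log_model}
    os.environ.update(settings)
    return settings


# Call this before constructing the Trainer
settings = configure_wandb_env("llama-4-fine-tune")
```

With this approach `report_to="wandb"` is still required in `TrainingArguments`; only the explicit `wandb.init` call becomes optional.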

Axolotl is built on the Hugging Face transformers Trainer, with a lot of additional modifications optimized for LLM fine-tuning. Pass the wandb arguments below to your config.yml file to turn on W&B logging.

Code:

# pass a project name to turn on W&B logging

wandb_project: llama-4-fine-tune

# "checkpoint" to log model to wandb Artifacts every `save_steps`

# or "end" to log only at the end of training

wandb_log_model: checkpoint

# Optional, your username or W&B Team name

wandb_entity:

# Optional, set the ID of your W&B run

wandb_run_id:

You can also use more advanced W&B settings by setting additional environment variables here.
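
As an illustration of the environment-variable route, the sketch below sets a few W&B variables in Python before training is launched. The variable names (`WANDB_MODE`, `WANDB_RUN_GROUP`, `WANDB_TAGS`, `WANDB_NOTES`) follow the W&B environment variable documentation; the values are placeholders chosen for this example.

```python
import os

# Placeholder values; adjust to your own project
advanced_settings = {
    "WANDB_MODE": "offline",                      # save run data locally, sync later
    "WANDB_RUN_GROUP": "high-bs-test",            # group related runs together
    "WANDB_TAGS": ",".join(["llama-4", "lora"]),  # comma-separated list of tags
    "WANDB_NOTES": "baseline fine-tune",          # free-text note attached to the run
}

for name, value in advanced_settings.items():
    # setdefault keeps any value already exported in the shell
    os.environ.setdefault(name, value)
```

Using `setdefault` rather than plain assignment means values exported in the launching shell take precedence over the defaults in the script.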

Lightning is a powerful trainer that lets you get started training in only a few lines. See the W&B Lightning documentation and the Lightning documentation to get started.

Code:

import wandb

# 1. Start a W&B run

run = wandb.init(project="my_first_project")

# 2. Save model inputs and hyperparameters

config = wandb.config

config.learning_rate = 0.01

# 3. Log metrics to visualize performance over time

for i in range(10):

    loss = 1.0 / (i + 1)  # placeholder value; use your real training loss here

    run.log({"loss": loss})

You can also use more advanced W&B settings by setting additional environment variables here.

MosaicML’s Composer library is a powerful, open source framework for training models and is what powers their LLM Foundry library. The Weights & Biases integration with Composer can be added to training with just a few lines of code.

See the MosaicML Composer documentation for more.

Code:

from composer import Trainer

from composer.loggers import WandBLogger

# initialise the logger

wandb_logger = WandBLogger(

    project="llama-4-fine-tune",

    log_artifacts=True,  # optional

    entity="<your W&B username or team name>",  # optional

    name="<set a name for your W&B run>",  # optional

    init_kwargs={"group": "high-bs-test"},  # optional

)

# pass the wandb_logger to the Trainer, logging will begin on training

trainer = Trainer(..., loggers=[wandb_logger])

You can also use more advanced W&B settings by passing additional wandb.init parameters to the init_kwargs argument, or modify additional W&B settings via the environment variables here.
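
Because `init_kwargs` is forwarded to `wandb.init`, it can carry any of that function's parameters. The sketch below collects a few of them (`group`, `job_type`, `notes`) into a dictionary; the helper name `build_init_kwargs` and the example values are hypothetical.

```python
def build_init_kwargs(group, job_type="fine-tune", notes=None):
    """Hypothetical helper: collect extra wandb.init parameters for init_kwargs."""
    kwargs = {"group": group, "job_type": job_type}
    if notes is not None:
        kwargs["notes"] = notes
    return kwargs


init_kwargs = build_init_kwargs("high-bs-test", notes="batch size 512")

# The dictionary would then be passed to the logger, for example:
# wandb_logger = WandBLogger(project="llama-4-fine-tune", init_kwargs=init_kwargs)
```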

Hugging Face Diffusers is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules.

With our diffusers autologger, you can log your generations from a diffusers pipeline to Weights & Biases in just one line of code.

Examples:

- A Guide to Prompt Engineering for Stable Diffusion

- PIXART-α: A Diffusion Transformer Model for Text-to-Image Generation

Code:

# import the pipeline class and the autolog function

from diffusers import DiffusionPipeline

from wandb.integration.diffusers import autolog

# call the W&B autologger before calling the pipeline

autolog(init={"project":"diffusers_logging"})

# Initialize the diffusion pipeline

pipeline = DiffusionPipeline.from_pretrained(

    "stabilityai/sdxl-turbo"

)

# call the pipeline to generate the images

images = pipeline("a photograph of a dragon")

OpenAI fine-tuning for GPT-3.5 and GPT-4 is powerful, and with the Weights & Biases integration you can keep track of every experiment, every result and every dataset version used.

See our OpenAI Fine-Tuning documentation for how to get started.

Examples:

- Fine-tuning OpenAI’s GPT with Weights & Biases

- Fine-Tuning ChatGPT for Question Answering

- Does Fine-tuning ChatGPT-3.5 on Gorilla improve API and Tool Usage Performance?

Code:

from wandb.integration.openai import WandbLogger

# call your OpenAI fine-tuning code here ...

# call .sync to log the results from the fine-tuning job to W&B

WandbLogger.sync(id=openai_fine_tune_job_id, project="My-OpenAI-Fine-Tune")

MosaicML offers fast and efficient fine-tuning and inference, and with the Weights & Biases integration you can keep track of every experiment, every result and every dataset version used.

See the MosaicML Fine-Tuning documentation for how to turn on W&B logging.

Code:

Add the following to your YAML config file to turn on W&B logging:

integrations:

  - integration_type: wandb

    # Weights and Biases project name

    project: llama-4-fine-tuning

    # The username or team name the Weights and Biases project belongs to

    entity: < your W&B username or team name >

Together.ai offers fast and efficient fine-tuning and inference for the latest open source models, and with the Weights & Biases integration you can keep track of every experiment!

See the Together.ai Fine-Tuning documentation for how to get started with fine-tuning.

Code:

# CLI

together finetune create .... --wandb-api-key $WANDB_API_KEY

# Python

import together

resp = together.Finetune.create(..., wandb_api_key = '1a2b3c4d5e.......')

If using the command line interface, pass your W&B API key to the wandb-api-key argument to turn on W&B logging. If using the Python library, pass your W&B API key to the wandb_api_key parameter.
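
In the Python case, the key can be read from the environment rather than written into source code, mirroring the `$WANDB_API_KEY` usage in the CLI example. The sketch below is one way to do that; the helper name `get_wandb_api_key` is hypothetical and not part of the together or wandb libraries.

```python
import os


def get_wandb_api_key(env=os.environ):
    """Hypothetical helper: read the W&B API key from the environment."""
    key = env.get("WANDB_API_KEY", "").strip()
    if not key:
        raise RuntimeError("WANDB_API_KEY is not set; export it before launching the job")
    return key


# The result would then be passed to the fine-tuning call, for example:
# resp = together.Finetune.create(..., wandb_api_key=get_wandb_api_key())
```

Failing early with a clear error avoids submitting a fine-tuning job that silently runs without W&B logging.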

The Hugging Face AutoTrain library offers LLM fine-tuning. By passing the --report-to wandb argument you can turn on W&B logging.

Code:

# CLI

autotrain llm ... --report-to wandb

Learn how to fine-tune an LLM in our free LLM course

In this free course, you will explore the architecture, training techniques, and fine-tuning methods for creating powerful LLMs. Gain theory and hands-on experience from Jonathan Frankle (MosaicML) and other industry leaders, and learn cutting-edge techniques like LoRA and RLHF.

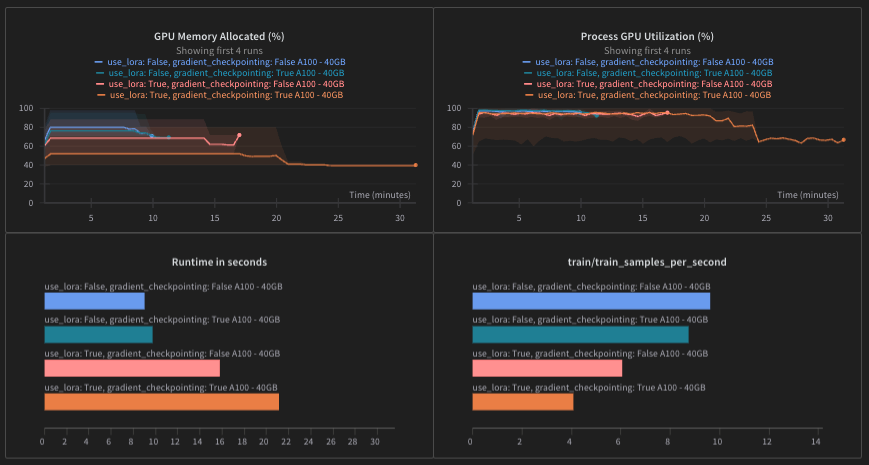

Learn how to fine-tune an LLM with HuggingFace

This interactive W&B report shows how to fine-tune an LLM with the Hugging Face Trainer, covering a few popular methods like LoRA and model freezing.

Trusted by the teams building state-of-the-art LLMs

VP of Technology

“W&B gives us a concise look at all projects. We can compare runs, aggregate them all in one place, and intuitively decide what works well and what to try next.”

See W&B in action

How to Fine-tune an LLM Part 3: The HuggingFace Trainer

How to Fine-Tune an LLM Part 2: Instruction Tuning Llama 2

How to Fine-Tune an LLM Part 1: Preparing a Dataset for Instruction Tuning

How to Fine-Tune Your OpenAI GPT-3.5 and GPT-4 Models with Weights & Biases

Fine-Tuning a Legal Copilot Using Azure OpenAI and W&B

Neuron Hacking: Can You Fine-Tune an LLM to Act as a Key-Value Store?

Fine-Tuning an Open Source LLM in Amazon SageMaker with W&B

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Model Registry

Register and manage your ML models

Automations

Trigger workflows automatically

Launch

Package and run your ML workflow jobs

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications