Introduction to the ML Model Registry

In the rapidly changing landscape of machine learning, where a steady supply of high-performing models is the backbone of innovative production solutions, a robust, secure, and accessible infrastructure is essential to managing and organizing your ML teams’ work.

Enter the ML model registry. A model registry is the centralized hub where ML teams store, catalog, access, distribute, and deploy their models, and it is the single source of truth for their production models. An effective model registry facilitates seamless model management through the sharing, versioning, and tracking of models. This enables ML teams to collaborate more efficiently, experiment faster, and confidently deploy higher-performing models into production.

For model development, software development, and MLOps teams, having a single, central repository for collaboration is a huge force multiplier for their ML activities. It is also a crucial component of an effective model CI/CD (Continuous Integration/Continuous Deployment) workflow.

Such a workflow enables automated ML model testing, evaluation, deployment, and monitoring. Additionally, model registries are crucial to facilitating a clean hand-off point between ML engineering and DevOps, creating a continuous cycle.

In this piece, we’ll walk you through a model registry’s different components and functionalities, how to integrate one into your workflow, and how to get started with the Weights & Biases Model Registry.

What is a Model Registry in ML?

In ML, a model registry is a centralized repository or model store, similar to a library, that lets you effectively manage and organize machine learning models. It is where models are stored, tracked, versioned, and made accessible to anyone at the company involved in deploying and using models in production.

This will likely include ML practitioners, data scientists, software developers, product managers, and others.

The model registry should provide model lineage for experimentation, model versioning, records of when models were pushed to production, and annotations from collaborators. It acts as a secure and organized repository that’s accessible across your org, streamlining model development, evaluation, and deployment.

The model registry also allows the entire team to manage the lifecycle of all models in the organization collaboratively. Your data scientists can push trained models to the registry. Once in the registry, the models can be tested, validated, deployed to production by MLOps, and evaluated continuously. This is, in many ways, quite similar to software DevOps.

Let’s look at the benefits in detail:

Benefits of an ML Model Registry

Streamline production model deployment

A machine learning model registry streamlines the complex model deployment process, allowing you to get better models into production faster and more easily. It does this by:

- Centralizing ML model management in one place: No more looking or asking around to find the models you need or wondering where the single source of truth for production is. With a model registry, you’ll always have the latest and greatest models readily available to deploy.

- Automating the deployment process: Trigger automatic evaluation jobs and integrate your model registry with downstream services and REST services to consume the model and serve it in the production environment automatically, saving time and avoiding manual errors.

Enable production model monitoring

Track the performance of deployed models in real-time, with the registry collecting real-time and aggregated metrics on production models. Keep an eye on metrics that matter, such as accuracy, latency, and resource utilization, to make optimization decisions faster. Improve model accuracy, reduce data drift, and avoid bias to ensure fairness and quality in production.

Create a continuous workflow

The model registry sits at the heart of a seamless, automated model CI/CD workflow. Integrate the registry with other tools, services, and automations to keep a continuous flow of evaluation and deployment, ensuring that you’re constantly putting the best models into production.

Improve collaboration and centralization

Seamless, efficient collaboration is crucial when deploying ML models in a production environment; mistakes in deploying the wrong or outdated models can be compounded and publicly glaring. A model registry facilitates teamwork by providing shared access and the ability to incorporate feedback and work on projects together, all within a controlled and organized environment.

Ensure governance and security

Security and governance are important throughout the ML workflow, especially in production. A model registry provides robust security measures to safeguard your models and granular role-based access controls to define permissions across your team.

The model registry should also enforce the documentation and reporting of models, ensuring results are repeatable and reproducible, especially by an auditing user. All of these factors promote compliance with data privacy regulations and internal governance policies.

Where does the Model Registry fit in your MLOps stack?

You can think of the model registry as sitting on the final part of the machine learning workflow right before we get into MLOps and deploying into your production environment:

Outputs from the model experimentation steps are fed into the model registry, which can then serve models into production. All models in the registry are tagged with their appropriate status (production or challenger), and contain full data and model lineage.

From there, users can manage model tasks and the model lifecycle (through version control) and set up automations to trigger pushing models into production.

The model registry also integrates seamlessly with deployment pipelines, facilitating swift and accurate transitions of models from development to production environments. Models in production can be monitored for data drift and decay and, when necessary, rolled back to the model registry for further testing and evaluation, or to update datasets.

When decaying models are automatically rolled back, having easy access to make changes in the model registry helps ensure that only the highest-quality models stay in production.

The Weights & Biases Model Registry

Weights & Biases is the AI development and ML observability platform. ML practitioners, MLOps teams, and ML leaders at the most cutting-edge AI and LLM organizations rely on W&B as a definitive system of record while boosting individual productivity, scaling production ML, and enabling a streamlined CI/CD ML workflow.

Offering over 20,000 integrations with ML frameworks and libraries and a robust partner ecosystem with all the major cloud and infra providers, the W&B platform seamlessly fits into any industry or use case, and across the entire ML lifecycle.

An effective model registry should be well integrated with your team’s experiment tracking system; with Weights & Biases you can do both seamlessly on the same platform! W&B already serves as your system of record to track experiment runs with different parameter configurations and help extensively with model development.

W&B Registry helps you take that to the next level by taking those successful experiments and storing the trained, production-ready models your team develops in this central repository. With all the data and model lineage, and other model activity details already recorded, users can find exactly what they’re looking for at any given time.

Exploring the Benefits of W&B Model Registry

Weights & Biases users have found tremendous value in establishing W&B Registry as their single source of truth for production models and in using it to facilitate model handoff and CI/CD. Many users have cited huge improvements in organization and clarity. That’s because, before using W&B Registry, many of these teams were managing production models in their heads, in spreadsheets, or in notebooks. This lack of documentation or lineage is messy and can lead to rough outcomes, including biased or non-performant models.

One key aspect of W&B Registry is the ability to link models. This provides clear connections between the versions of a registered model and their source artifacts, and vice versa.

W&B users get a lot of value from linking models, including:

Publishing production-ready models

Users can register or upload their trained ML models from successful experiments into a team registry. This allows the model to be stored and tracked within W&B Registry, bridging the gap between experiment and production activities.

Lifecycle ML model management

Users can view different versions of their registered models and track changes while maintaining a recorded history of all model iterations. By adding or removing custom aliases on model versions, such as “candidate,” “staging,” and “production,” they can easily find the candidate versions they’re looking for after running new experiments, updating existing ones, or deprecating outdated models.

Reusability and collaboration

Once a model has been published to the registry, it can be readily consumed by either MLOps engineers looking to deploy the model to production or other ML practitioners looking to use this model in downstream pipelines. The W&B Registry makes consuming models in downstream runs easy via run.use_artifact(model).

Governance and access controls

W&B Registry provides full auditing capabilities on all actions performed on model versions linked to the registry, with full records of state transitions from development through staging and production to archived. Role-based access controls ensure that only those with the right access levels can move models into a new pipeline stage – including into production.

Automate downstream actions

Add an automation to easily trigger workflow steps when adding a new version to a registered model or adding a new alias. Webhook automations allow users to kick off downstream actions such as model testing and deployment on their own infrastructure with a lightweight connector, like W&B Launch.
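To make the webhook idea concrete, here is a minimal sketch of the receiving side, using only the Python standard library. It assumes your automation sends a JSON body; the field names (`event_type`, `alias`), the event name, and the action names are illustrative assumptions, so match them to whatever payload you configure for your own automation.

```python
import json
from typing import Optional

# Hypothetical mapping from a registry alias to the downstream job to start.
ACTIONS_BY_ALIAS = {
    "staging": "run-integration-tests",
    "production": "deploy-to-serving",
}


def choose_action(raw_body: bytes) -> Optional[str]:
    """Return the downstream action for a webhook payload, or None to ignore it.

    The payload fields read here ("event_type", "alias") are assumptions;
    adjust them to the payload your automation actually sends.
    """
    try:
        payload = json.loads(raw_body)
    except ValueError:
        return None  # malformed body: ignore it rather than crash the receiver
    if not isinstance(payload, dict):
        return None
    if payload.get("event_type") != "ADD_ARTIFACT_ALIAS":
        return None
    alias = payload.get("alias")
    if not isinstance(alias, str):
        return None
    return ACTIONS_BY_ALIAS.get(alias)
```

Keeping the decision logic in a plain function like this makes it easy to test without standing up a web server; the HTTP endpoint that calls it can live in whatever framework your infrastructure already uses.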

Alerting via Slack or Teams notifications

Users can also configure registered models to listen for when new model versions are linked and be automatically notified in their Slack or Teams channel.

How to Get Started with the W&B Model Registry

Getting started with W&B Registry is easy! Once you’ve signed up for your Weights & Biases account, you can follow these steps to use W&B Registry for ML model management.

1) Create a new registered model

First, create a registered model to hold all the candidate models for your modeling task. You can create a registered model interactively via the W&B App (clicking into either the Model Registry tab or Artifact Browser tab) or create a registered model programmatically using the W&B Python SDK.

Select your entity, fill in the details and appropriate tags, and there you have it: your first registered model!

2) Train and log model versions

Now log a model from your training script. You can serialize your model to disk periodically and/or at the end of training, using the serialization process provided by your modeling library (e.g., PyTorch or Keras).

Next, add your model files to an Artifact of type model. We recommend name-spacing your Artifacts with their associated Run id to stay organized. You can also declare your dataset as a dependency so that it is tracked for reproducibility and auditability.
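Here’s a minimal sketch of this step with the W&B Python SDK. It assumes `run` is the object returned by `wandb.init()`, that your model has already been serialized to `model_path`, and that `dataset_ref` points at a dataset artifact you logged earlier. The helper names `artifact_name_for_run` and `log_model_version` are our own, not part of the SDK.

```python
def artifact_name_for_run(run_id: str, prefix: str = "model") -> str:
    """Name-space the artifact with the run id, e.g. 'model-1a2b3c4d'."""
    return f"{prefix}-{run_id}"


def log_model_version(run, model_path: str, dataset_ref: str):
    """Log a serialized model file as a new version of a 'model' artifact.

    `run` is assumed to come from wandb.init(); `dataset_ref` is a
    placeholder reference such as "my-dataset:latest".
    """
    import wandb  # imported here so the naming helper works without the SDK

    # Declare the dataset as an input so it appears in the model's lineage.
    run.use_artifact(dataset_ref)

    # Create an artifact of type "model" and attach the serialized file.
    artifact = wandb.Artifact(name=artifact_name_for_run(run.id), type="model")
    artifact.add_file(model_path)
    return run.log_artifact(artifact)
```

In a training script, you might call `log_model_version(run, "model.pt", "my-dataset:latest")` right after writing a checkpoint; both names are placeholders for your own file and dataset.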

After logging one or more model versions, you will notice a new model Artifact in your Artifact browser. In this example, you can see the results of logging versions of an artifact.

3) Link ML model versions to the registered model

Now link the different model versions you’ve just trained and logged to the registered model you initially created.

You can do this in the W&B app or programmatically via the Python SDK. To do this in the app, navigate to the model version you want to link and click the link icon. You can then select the target registered model to which you want to link this version.

You also have the option to add additional aliases.

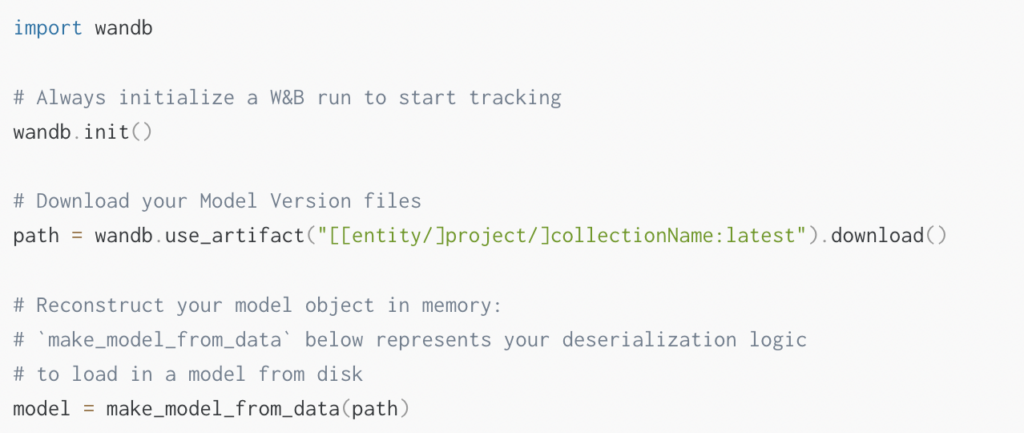
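If you’d rather link programmatically, a sketch along these lines should work. It assumes `run` comes from `wandb.init()` and `artifact` is a model artifact you have already logged. The `model-registry/<name>` target path is an assumption about how your registry is addressed, so it is exposed as a parameter; check the path your own W&B account uses. `link_version` is a hypothetical helper name.

```python
from typing import List, Optional


def registry_target_path(
    registered_model: str, registry_project: str = "model-registry"
) -> str:
    """Build the link target; the default prefix is an assumption."""
    return f"{registry_project}/{registered_model}"


def link_version(
    run,
    artifact,
    registered_model: str,
    aliases: Optional[List[str]] = None,
) -> str:
    """Link a logged model artifact to a registered model and return the target."""
    target = registry_target_path(registered_model)
    # Aliases passed here apply to the linked version inside the registered model.
    run.link_artifact(artifact=artifact, target_path=target, aliases=aliases)
    return target
```

For example, `link_version(run, artifact, "Churn Classifier", aliases=["staging"])` would link the version and tag it as a staging candidate in one call (the model name is a placeholder).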

4) Use a model version

Now it’s time to consume the model. Perhaps you want to evaluate its performance, make predictions against a dataset, or even use it in a live production context. Use the following code snippet to use a model with the Python SDK.

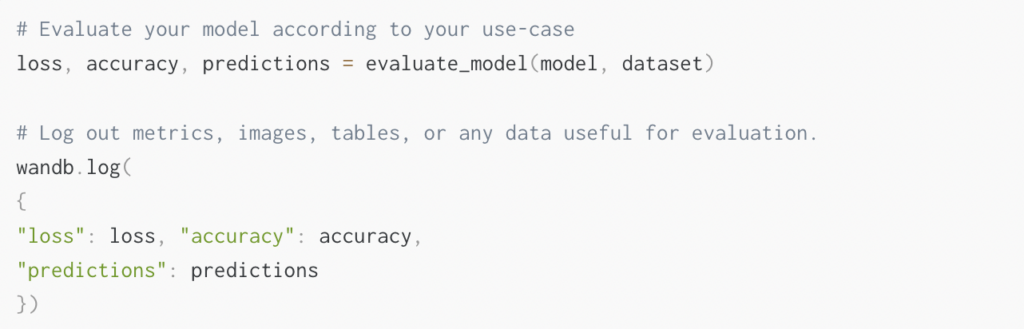
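For readers who want something to copy and paste, here is a text sketch of consuming a model. It is not necessarily identical to the snippet pictured above. It assumes `run` comes from `wandb.init()` and that `model_ref` is a reference to a version in your registered model; `download_model` is a hypothetical helper name.

```python
def download_model(run, model_ref: str) -> str:
    """Declare `model_ref` as an input of `run` and download its files.

    Returns the local directory that contains the model files.
    """
    # use_artifact records the dependency so lineage shows which run used the model.
    artifact = run.use_artifact(model_ref, type="model")
    return artifact.download()


# Example usage (requires a W&B login; all names are placeholders):
#   import wandb
#   run = wandb.init(project="churn", job_type="inference")
#   model_dir = download_model(run, "model-registry/Churn Classifier:latest")
#   run.finish()
```

From there, load the files in `model_dir` with the same library you used to serialize the model.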

5) Evaluate model performance

You’ll want to evaluate the performance of all those models you’ve trained, perhaps against a test dataset that was not used for training or validation.

After completing the previous step, you can declare a data dependency to your evaluation data and log metrics, media, tables, and anything else useful for evaluation.
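As a rough sketch of that evaluation step, the helper below declares the evaluation dataset as an input and logs a single metric. It assumes `run` comes from `wandb.init()`, that you already have predictions and labels as plain Python lists, and that accuracy is the metric you care about. The function names and the `eval/accuracy` key are illustrative choices, not W&B conventions.

```python
def accuracy(predictions, labels) -> float:
    """Fraction of predictions that exactly match their labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def log_evaluation(run, eval_dataset_ref: str, predictions, labels) -> dict:
    """Record the data dependency, then log evaluation metrics to the run."""
    # Declaring the dataset as an input ties the results to the exact data used.
    run.use_artifact(eval_dataset_ref)
    metrics = {"eval/accuracy": accuracy(predictions, labels)}
    run.log(metrics)
    return metrics
```

You can extend the `metrics` dictionary with anything else that helps you compare versions, such as latency or per-class scores.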

6) Promote a model version to production

Once you’re satisfied with your model evaluation, you can promote the version you want to use for production by giving it an alias. A registered model can have more than one alias, but each alias can only be assigned to one version at a time. Again, you can add an alias interactively within the W&B app or programmatically via the Python SDK.
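The alias rule is easy to picture with a toy model. The class below is a plain-Python illustration of the behavior described above, not the W&B API: a registered model can carry many aliases, each alias points at exactly one version, and assigning an alias to a new version moves it away from the old one.

```python
from typing import Dict, List


class AliasTable:
    """Toy model of registry aliases: many aliases, each on exactly one version."""

    def __init__(self) -> None:
        self._version_by_alias: Dict[str, str] = {}

    def assign(self, alias: str, version: str) -> None:
        # Re-assigning an alias implicitly removes it from its previous version.
        self._version_by_alias[alias] = version

    def resolve(self, alias: str) -> str:
        """Return the version an alias currently points at."""
        return self._version_by_alias[alias]

    def aliases_of(self, version: str) -> List[str]:
        """Return all aliases on a version, sorted for stable output."""
        return sorted(a for a, v in self._version_by_alias.items() if v == version)


table = AliasTable()
table.assign("production", "v2")
table.assign("staging", "v3")
table.assign("production", "v3")  # promote v3; v2 loses the alias
```

After the promotion, `table.resolve("production")` returns `"v3"`, and `v2` no longer carries any alias, which is exactly why consumers that reference the alias pick up the new version without code changes.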

7) Consume the production model

Finally, use your production model for inference. Refer to step 4 above for how to consume the model in a live production context. You can reference a version within a registered model using different alias strategies: for example, using “latest,” “v3,” or “production.”
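To illustrate the three alias strategies, here is a small helper that builds the reference string for each. The `model-registry` prefix and the optional entity prefix are assumptions about how your registry is addressed, so both are parameters; the model name is a placeholder and `model_reference` is a hypothetical helper name.

```python
from typing import Optional


def model_reference(
    registered_model: str,
    alias: str = "latest",
    registry_project: str = "model-registry",
    entity: Optional[str] = None,
) -> str:
    """Build a '<registry>/<model>:<alias>' reference, optionally entity-qualified."""
    path = f"{registry_project}/{registered_model}:{alias}"
    return f"{entity}/{path}" if entity else path


# Three strategies for the same registered model:
floating = model_reference("Churn Classifier", "latest")     # newest linked version
pinned = model_reference("Churn Classifier", "v3")           # one exact version
managed = model_reference("Churn Classifier", "production")  # whatever was promoted
```

Pinning to a version such as `v3` gives you reproducibility, while a managed alias such as `production` lets your serving code follow promotions automatically.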

8) Build a reporting dashboard

Using W&B Weave panels, you can display any of the Model Registry or Artifact views inside Reports! Build reports that display the metadata, usage, files, lineage, action history audit, or version history analysis – whatever you want to dig into and analyze.

Check out this example of a Model Dashboard:

Conclusion

By now, we’ve established that model registries are essential to the efficient, sophisticated operationalization of ML workflows and projects. A well-organized model registry serves as the central hub and single source of truth for all model statuses, and as the final hand-off point to MLOps and into production. It ensures smooth automated transitions, reduces the chance of errors or low-quality models in production, and gives ML teams peace of mind.