Deliver AI with confidence

import weave

weave.init("quickstart")

@weave.op()
def llm_app(prompt):
    ...  # application logic goes here

Improve quality, cost, latency, and safety

Weave works with any LLM and framework and comes with a ton of integrations out of the box

Quality

Accuracy, robustness, relevancy

Cost

Token usage and estimated cost

Latency

Track response times and bottlenecks

Safety

Protect your end users using guardrails
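To make the cost and latency items above concrete, here is a minimal plain-Python sketch of the kind of per-call record a tracing tool can collect. It does not use Weave's API. The `tracked` decorator, the `CallRecord` class, the `fake_llm` stand-in, and the prices in `PRICE_PER_1K` are all hypothetical and chosen only for illustration.

```python
import time
from dataclasses import dataclass
from functools import wraps

# Hypothetical prices in USD per 1,000 tokens; real prices depend on the model.
PRICE_PER_1K = {"prompt": 0.0005, "completion": 0.0015}


@dataclass
class CallRecord:
    name: str
    latency_s: float
    prompt_tokens: int
    completion_tokens: int

    @property
    def estimated_cost(self) -> float:
        """Estimated cost in USD, derived from the token counts."""
        return (
            self.prompt_tokens * PRICE_PER_1K["prompt"]
            + self.completion_tokens * PRICE_PER_1K["completion"]
        ) / 1000


RECORDS = []  # one CallRecord per tracked call


def tracked(fn):
    """Time a call and record the token usage it reports."""
    @wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        text, usage = fn(*args, **kwargs)
        latency = time.perf_counter() - start
        RECORDS.append(
            CallRecord(
                fn.__name__,
                latency,
                usage["prompt_tokens"],
                usage["completion_tokens"],
            )
        )
        return text
    return wrapper


@tracked
def fake_llm(prompt: str):
    # Stand-in for a real model call; whitespace-split words approximate tokens.
    reply = prompt.upper()
    usage = {
        "prompt_tokens": len(prompt.split()),
        "completion_tokens": len(reply.split()),
    }
    return reply, usage


fake_llm("hello weave world")
record = RECORDS[0]
print(record.name, record.prompt_tokens, record.completion_tokens)
```

In a real application the token counts would come from the provider's response rather than from word counts.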

Measure and iterate

Visual comparisons

Use powerful visualizations for objective, precise comparisons

Automatic versioning

Save versions of your datasets, code, and scorers

import openai, weave

weave.init("weave-intro")

@weave.op

def correct_grammar(user_input):

client = openai.OpenAI()

response = client.chat.completions.create(

model="o1-mini",

messages=[{

"role": "user",

"content": "Correct the grammar:\n\n" +

user_input,

}],

)

return response.choices[0].message.content.strip()

result = correct_grammar("That was peace of cake!")

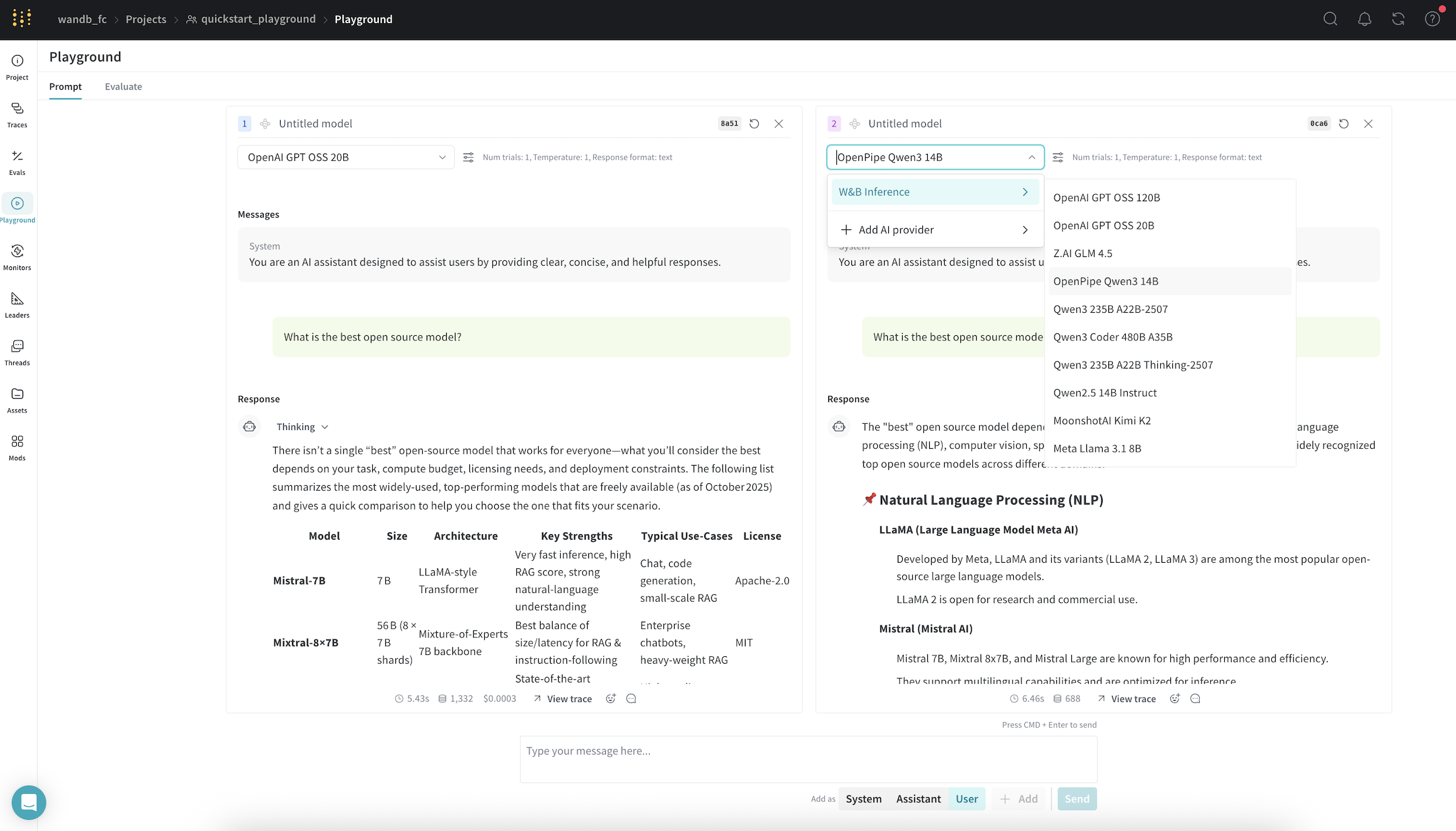

print(result)Playground

Iterate on prompts in an interactive chat interface with any LLM

Leaderboards

Group evaluations into leaderboards featuring the best performers and share across your organization

Log everything for production monitoring and debugging

Debugging with trace trees

Weave organizes logs into an easy-to-navigate trace tree so you can identify issues

Multimodality

Track any modality: text, code, documents, images, and audio. Other modalities coming soon

Easily work with long-form text

View large strings like documents, emails, HTML, and code in their original format

Online evaluations

Score live incoming production traces for monitoring without impacting performance
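One common way to score live traffic without slowing the request path is to hand outputs to a background worker. The sketch below shows that pattern with the standard library only. It is an assumption about the general technique, not a description of Weave's internals. The `length_scorer` rule, `handle_request`, and the trace IDs are hypothetical.

```python
import queue
import threading

score_queue = queue.Queue()  # outputs waiting to be scored
SCORES = {}                  # trace_id -> score


def length_scorer(output: str) -> float:
    # Hypothetical scorer: 1.0 if the response fits in 200 characters.
    return 1.0 if len(output) <= 200 else 0.0


def scoring_worker():
    """Score queued outputs off the request path."""
    while True:
        trace_id, output = score_queue.get()
        try:
            SCORES[trace_id] = length_scorer(output)
        finally:
            score_queue.task_done()


threading.Thread(target=scoring_worker, daemon=True).start()


def handle_request(trace_id: str, prompt: str) -> str:
    output = f"echo: {prompt}"           # stand-in for the real model call
    score_queue.put((trace_id, output))  # enqueue and return immediately
    return output


handle_request("t1", "hello")
handle_request("t2", "x" * 500)

# Demo only: wait for the worker to drain the queue before reading scores.
score_queue.join()
print(SCORES)
```

The request handler only pays for a queue insert, so a slow scorer delays the scores, not the responses.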

Observability and governance tools for agentic systems

Build state-of-the-art agents

Supercharge your iteration speed and top the charts

Agent framework and protocol agnostic

Integrates with leading agent frameworks such as the OpenAI Agents SDK and protocols such as MCP

import weave
from openai import OpenAI

weave.init("agent-example")

@weave.op()
def my_agent(query: str):
    client = OpenAI()
    response = client.chat.completions.create(...)
    return response

my_agent("What is the weather?")

Trace trees purpose-built for agentic systems

Easily visualize agent rollouts to pinpoint issues and improvements

Use our scorers or bring your own

Pre-built scorers

Jumpstart your evals with out-of-the-box scorers built by our experts

Write your own scorers

Near-infinite flexibility to build custom scoring functions to suit your business

import weave, openai

llm_client = openai.OpenAI()

@weave.op()
def evaluate_output(generated_text, reference_text):
    """
    Evaluates AI-generated text against a reference answer.

    Args:
        generated_text: The text generated by the model
        reference_text: The reference text to compare against

    Returns:
        float: A score between 0-10
    """
    system_prompt = """You are an expert evaluator of AI outputs.
    Your job is to rate AI-generated text on a scale of 0-10.
    Base your rating on how well the generated text matches
    the reference text in terms of factual accuracy,
    comprehensiveness, and conciseness."""

    user_prompt = f"""Reference: {reference_text}
    AI Output: {generated_text}
    Rate this output from 0-10:"""

    response = llm_client.chat.completions.create(
        model="gpt-4-turbo",
        messages=[
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt}
        ],
        temperature=0.2
    )

    # Extract the score from the response
    score_text = response.choices[0].message.content

    # Parse score (assuming it returns a number between 0-10)
    try:
        score = float(score_text.strip())
        return min(max(score, 0), 10)  # Clamp between 0-10
    except (ValueError, AttributeError):
        # Fallback score if the reply is empty or not a bare number
        return 5.0

Human feedback

Collect user and expert feedback for real-life testing and evaluation

Third-party scorers

Plug and play off-the-shelf scoring functions from other vendors
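Whichever scorer is used, an LLM judge such as `evaluate_output` above may wrap its rating in extra words ("Score: 7.5/10"), which a bare `float()` call cannot parse. A more tolerant parser could look like the following sketch. The `parse_score` helper is hypothetical, and returning `None` instead of a default score is a design assumption, made so that unparseable replies stay distinguishable from genuine mid-range ratings.

```python
import re
from typing import Optional

# Matches the first integer or decimal number, with an optional minus sign.
_NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def parse_score(
    text: Optional[str], low: float = 0.0, high: float = 10.0
) -> Optional[float]:
    """Return the first number in text, clamped to [low, high].

    Returns None when text is empty or contains no number. Limitation:
    only the first number is used, so a reply like "out of 10, I give 7"
    would be read as 10.
    """
    if not text:
        return None
    match = _NUMBER.search(text)
    if match is None:
        return None
    return min(max(float(match.group()), low), high)


for reply in ["8", "Score: 7.5/10", "I'd rate this 12", "no idea"]:
    print(repr(reply), "->", parse_score(reply))
```

Callers can then decide how to treat `None`, for example by excluding that sample from an average instead of counting it as 5.0.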

Safeguard your users and brand

Access popular open-source models

Playground or API access

Access to leading open-source foundation models