See how Weights & Biases integrates with the ML ecosystem

Regardless of your preferred framework, environment, or workflow, our platform integrates with your existing tools and can be flexibly layered on top of any infrastructure.

“Putting AI into production requires enterprises to manage a broad range of processes, including data governance, experiment tracking, workload management, and compute orchestration. Pairing W&B with Run:ai MLOps software with NVIDIA accelerated systems and NVIDIA AI Enterprise software enables enterprises to effectively deploy intelligent applications that solve real-world business challenges.”

Scott McClellan, Senior Director of Data Science and MLOps, NVIDIA

Weights & Biases integrates with a wide variety of tools across the ML lifecycle:

Data partners

- NetApp

- Activeloop

- NVIDIA

- Snowflake

- S3

- GCS

- Azure Blob Storage

Data stored in any of these sources can be logged to W&B for visualization and analysis.

Decisions made in W&B can then be pushed back to the data sources as actions (e.g., fixing labeling errors, collecting more data, or transforming data in a different way).

Training partners

- Anyscale

- SageMaker

- Run:ai

- NVIDIA

- Vertex AI

- Lambda

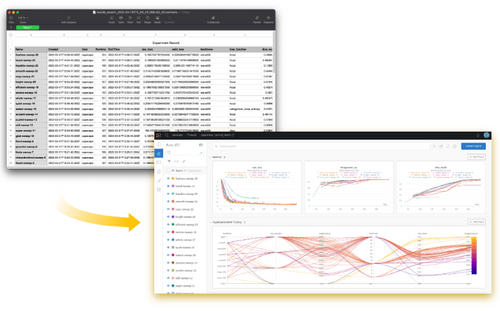

Training experiments can be logged to W&B for visualization and analysis.

Decisions made in W&B can then be pushed back to training environments as actions (e.g., training with different hyperparameters, changing the model architecture, or retraining on different datasets).
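As a rough illustration of what logging a training experiment can look like, here is a minimal sketch using the `wandb` Python client. The project name, the hyperparameter values, and the `simulated_loss` helper are hypothetical stand-ins for a real training job; the sketch assumes `wandb` is installed and uses offline mode by default so that no account or network connection is needed.

```python
import math


def simulated_loss(step: int) -> float:
    """Hypothetical stand-in for a real training loss: decays with each step."""
    return math.exp(-0.1 * step)


def train_and_log(project: str, learning_rate: float, steps: int,
                  mode: str = "offline") -> None:
    """Record hyperparameters and a per-step loss curve in a W&B run."""
    # Imported here so the helper above can be used without wandb installed.
    import wandb

    # The config dict stores the hyperparameters alongside the run.
    run = wandb.init(
        project=project,
        config={"learning_rate": learning_rate, "steps": steps},
        mode=mode,  # "offline" writes locally; "online" syncs to W&B
    )
    for step in range(steps):
        # Each call adds one point to the "loss" chart for this run.
        wandb.log({"loss": simulated_loss(step)}, step=step)
    run.finish()
```

Calling `train_and_log("partner-demo", 1e-3, 10)` would create one offline run with ten logged loss values. In a real job, the simulated loss would be replaced by the metric computed in the training loop on whichever training platform is in use.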

Workflow partners

- Kubeflow

- Jenkins

- Airflow

- GitHub Actions

- Astronomer

Machine learning workloads triggered by orchestration solutions can be logged to W&B for visualization and analysis.

Automation actions scheduled in W&B can be integrated into orchestration and CI/CD systems as part of the broader end-to-end workflow.

Production partners

- OctoML

- SageMaker

- Run:ai

- NVIDIA

- Lambda

Production model performance and data can be logged to W&B for visualization and analysis.

Decisions made in W&B can then be pushed back to production environments as actions (e.g., deploying model A to production, or changing the model version from A to B).

Multi-cloud and flexible deployment

Weights & Biases offers unmatched cloud flexibility, with secure enterprise deployments via a managed SaaS cloud or dedicated private cloud.

We are available on all three major cloud marketplaces, with full integrations with Amazon SageMaker and Google Cloud Vertex AI. Weights & Biases is also framework and library agnostic, working with popular libraries and services such as Azure OpenAI, Optimized PyTorch, and Hugging Face on cloud platforms.

Secure enterprise deployments

Your infrastructure of choice

Best-in-class compute hardware

Weights & Biases is a DGX-ready software provider and available for use with NVIDIA Base Command. Leverage the power of NVIDIA accelerated computing to build and deploy better models faster and supercharge your ML practice. We’re also constantly adding new integrations with NVIDIA, like the NVIDIA-developed toolkit NeMo for speech recognition.

Weights & Biases works with 30,000+ ML libraries and repos.

Become a partner

Channel partners

Channel partners work with Weights & Biases to gain expertise in our platform and to market and sell collaboratively.

Technology partners

Technology partners are independent software providers whose technology extends and enhances the Weights & Biases experience.

Solution partners

Solution and consulting partners provide expertise and create industry-specific offerings that help clients apply Weights & Biases to their ML initiatives.