
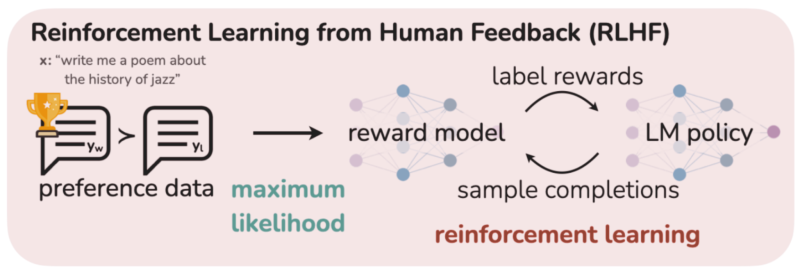

Reinforcement learning from human feedback (RLHF) is a machine learning approach that leverages human insights to train models, particularly large language models (LLMs), for better alignment with human preferences. Instead of just using pre-set reward functions, RLHF incorporates human input to construct a reward model that reflects human judgment. This model then facilitates further training of the main model through reinforcement learning, leading to more accurate and beneficial outputs.

Instead of relying purely on traditional supervised learning, where a model learns from labeled datasets, RLHF introduces direct human feedback during the training process. This feedback might take the form of human preference rankings, chosen versus rejected responses, or other signals that reflect how humans evaluate the quality or helpfulness of the AI’s outputs.

The primary benefit of RLHF is that it enables AI systems to align more closely with human goals, values, and expectations. By incorporating actual human preferences, models trained with RLHF can be made more helpful, less likely to generate harmful outputs, and overall more reliable when interacting in the real world.

RLHF is now widely used as a core technique for AI alignment in state-of-the-art chatbots and virtual assistants. This tutorial will guide you through the RLHF process, provide practical code examples, and show you how to use Weights & Biases for tracking experiments and visualizing model performance.

Reinforcement learning from human feedback is a machine learning technique where a model is trained to maximize a reward signal shaped by human preferences rather than a predefined manual reward function. In traditional reinforcement learning, the reward is set explicitly through rules or signals from the environment. Meanwhile, in RLHF, the reward signal comes from data representing what humans consider to be good, safe, or helpful behavior.

The RLHF process has several components. First, human evaluators are given pairs of outputs from the model and asked which one they prefer, or they may rank multiple outputs.

The collected preference data is used to train a reward model. The reward model learns to assign higher scores to outputs that better match human preferences. This reward model then acts as a stand-in for human judgment during training.

After the reward model is trained, it is used in a reinforcement learning loop. The main model, such as a large language model for generative AI, tries to maximize the scores assigned by the reward model. This enables scalable and efficient training that remains centered on human values.

RLHF is especially important in generative AI and language models, where it can be difficult to capture what makes a response helpful, relevant, or safe using fixed rules. By training with data based on human feedback, RLHF enables models to produce outputs that are more appropriate, useful, and aligned with human expectations. This method is now widely used to help ensure that advanced AI systems better reflect human goals and values.

During the pretraining stage of building a language model, the main goal is to generate the most likely next word or token based on large datasets of existing text. While this allows the model to learn grammar, facts, and general language usage, it does not always result in behavior that is helpful, safe, or in line with human expectations. A model focused solely on likely continuations may produce bland, biased, or inappropriate responses that fail to account for real-world context or nuanced communication.
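To make the pretraining objective concrete, here is a minimal sketch of the next-token loss, assuming a hypothetical three-token vocabulary and hand-written probabilities in place of a real model. The function name `next_token_loss` and the numbers are illustrative only.

```python
import math

def next_token_loss(probs_per_step, target_ids):
    """Average negative log-likelihood of the true next tokens."""
    total = 0.0
    for probs, target in zip(probs_per_step, target_ids):
        total += -math.log(probs[target])
    return total / len(target_ids)

# Hypothetical model outputs: each row is a predicted distribution
# over a 3-token vocabulary at one position in the text.
predicted = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
]
targets = [0, 1]  # indices of the tokens that actually came next

loss = next_token_loss(predicted, targets)
print(f"next-token loss: {loss:.4f}")
```

Nothing in this objective measures helpfulness or safety. It only rewards assigning high probability to whatever token came next in the training text, which is the gap RLHF is meant to close.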

Reinforcement learning from human feedback addresses this limitation by introducing a reward signal based on human preferences. With RLHF, the model is fine-tuned to generate outputs that better match what people actually want from an AI system, going beyond what is merely statistically probable. This alignment ensures that the AI is not only producing logically correct sentences but is also helpful, responsible, and considerate of user intent.

One major benefit of RLHF is improved AI-human interaction. When a language model is trained with human feedback, it becomes more capable of generating responses that are relevant, polite, and contextually appropriate. This leads to more natural and satisfying conversations, whether the AI is being used in customer support, virtual assistants, or creative writing tools. RLHF also enables models to handle ambiguity and nuance in human communication, which are challenging to capture with rigid, rule-based systems.

In decision-making tasks, RLHF allows AI models to take into account complex human preferences that may not be easily expressed through standard metrics. By integrating human feedback into the training loop, language models and generative AI systems can better prioritize safety, fairness, and ethical considerations.

Overall, RLHF is a key method for aligning language models and generative AI with human goals and values. It helps ensure that advanced AI systems produce outputs that are useful, trustworthy, and respectful of the needs of the people who interact with them.

Reinforcement learning from human feedback is a multi-stage process that adapts language models to better align with human expectations and preferences. Here is how RLHF typically works in the context of large language models:

The process begins with data collection, where humans interact with the language model and provide demonstrations of ideal responses to various prompts or questions. This feedback is used to create a high-quality dataset consisting of prompt-response pairs. The language model is then trained using supervised fine-tuning on this data, allowing it to learn and mimic human-like answers as a strong starting point.
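As a rough illustration of supervised fine-tuning on prompt-response pairs, the sketch below computes the loss only over the tokens of the human demonstration. Masking out the prompt tokens is a common convention rather than something this article specifies, and the log-probabilities are made up for the example.

```python
import math

def sft_loss(token_log_probs, is_response_token):
    """Mean negative log-likelihood over response tokens only."""
    picked = [-lp for lp, keep in zip(token_log_probs, is_response_token) if keep]
    return sum(picked) / len(picked)

# Hypothetical log-probabilities the model assigns to each token of
# "prompt + demonstration". The first two tokens belong to the prompt.
log_probs = [math.log(0.5), math.log(0.25), math.log(0.9), math.log(0.6)]
mask = [False, False, True, True]  # assumption: train on the response only

loss = sft_loss(log_probs, mask)
print(f"SFT loss: {loss:.4f}")
```

Minimizing this loss pushes the model to reproduce the demonstrated response when it sees the prompt, which is what gives the later RL stage a reasonable starting point.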

Next, the model generates multiple responses to a wide range of prompts. Human evaluators review these responses in pairs or sets and indicate which outputs they prefer. These preferences are collected and used to train a reward model, a separate neural network that learns to assign higher scores to responses more aligned with human feedback. This reward model serves as a proxy for direct human judgment, allowing the language model to be optimized in a scalable way.

After the reward model is established, the language model is further trained using reinforcement learning, typically with an algorithm called Proximal Policy Optimization (PPO). The main objective in this phase is to update the language model so it generates responses that the reward model, informed by human preferences, considers high-quality.

Reinforcement learning (RL) works by treating the language model as an agent that interacts with an environment (in this case, text generation based on prompts). For each prompt, the model generates a response, receives a reward score from the reward model, and updates its policy, the rules it uses to generate text, to increase the likelihood of producing high-reward responses in the future.

A fundamental technique in reinforcement learning is the policy gradient method. In this approach, the “policy” is the probability distribution over possible outputs given an input. Policy gradient algorithms optimize this policy directly by estimating the gradient of expected reward with respect to the model’s parameters. Put simply, the algorithm adjusts the model’s weights so that responses that yield higher rewards become more probable over time.
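The idea can be sketched with a toy policy that chooses among three candidate responses. Real policy gradient methods estimate the gradient from sampled responses. Because this toy has only three options, the sketch computes the exact gradient of expected reward instead. The reward values, learning rate, and function names are assumptions made for illustration.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def expected_reward_gradient(logits, rewards):
    """Exact gradient of E[reward] w.r.t. the logits of a softmax policy."""
    probs = softmax(logits)
    expected = sum(p * r for p, r in zip(probs, rewards))
    # For a softmax policy: d E[r] / d z_k = p_k * (r_k - E[r])
    return [p * (r - expected) for p, r in zip(probs, rewards)]

rewards = [0.1, 0.5, 0.9]   # hypothetical scores for three responses
logits = [0.0, 0.0, 0.0]    # start from a uniform policy
learning_rate = 1.0

for _ in range(100):
    grad = expected_reward_gradient(logits, rewards)
    logits = [z + learning_rate * g for z, g in zip(logits, grad)]

probs = softmax(logits)
print([round(p, 3) for p in probs])
```

Each step raises the logit of any response whose reward is above the current expected reward and lowers the others, so probability mass shifts toward the highest-reward response.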

However, directly applying policy gradients can sometimes lead to unstable training or drastic changes in the model’s behavior, a problem known as “policy collapse.” Proximal policy optimization addresses this by introducing a constraint during updates. PPO ensures that the language model does not deviate too far from its previous behavior in a single update step. It does this by using a “clipped” objective function that limits the size of the policy update. This maintains a balance between making improvements and keeping the model stable and predictable.
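A minimal sketch of the clipped objective for a single sample is shown below. Here `ratio` is the probability of a response under the updated policy divided by its probability under the previous policy, and `advantage` is how much better the response scored than a baseline. Both names, and the default `epsilon=0.2`, are assumptions for illustration rather than values given in this article.

```python
def clipped_objective(ratio, advantage, epsilon=0.2):
    """PPO clipped surrogate objective for one sample."""
    clipped_ratio = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
    # Taking the minimum removes any incentive to move the ratio
    # outside [1 - epsilon, 1 + epsilon] in the direction that helps.
    return min(ratio * advantage, clipped_ratio * advantage)

# Good response, small policy change: the objective is unclipped.
inside = clipped_objective(1.1, 2.0)

# Good response, large policy change: the gain is capped at (1 + epsilon) * advantage.
capped = clipped_objective(1.5, 2.0)

# Bad response whose probability has already dropped a lot: no extra credit.
floored = clipped_objective(0.5, -2.0)

print(inside, capped, floored)
```

Once the ratio leaves the clipping range in the favorable direction, the objective stops changing, so a single update has no reason to push the policy further.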

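During PPO training, the process is typically as follows: the language model generates a response to each prompt in a batch, the reward model scores each response, and PPO updates the model with the clipped objective so that high-scoring responses become more likely.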

By repeating this loop, PPO gradually steers the language model to generate outputs that better reflect human feedback while maintaining training stability and preventing undesirable, extreme behaviors. This makes PPO a widely used and effective algorithm for aligning large language models with complex human goals through RLHF.
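The loop can be sketched on a toy problem with three candidate responses and fixed scores standing in for the reward model. This is an illustration under simplifying assumptions, not a production recipe: the expectation is taken over all three responses instead of sampling, the baseline is the expected reward under the old policy, and all names and hyperparameters are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ppo_step(logits, old_probs, advantages, epsilon=0.2, lr=1.0):
    """One gradient-ascent step on the exact clipped objective."""
    probs = softmax(logits)
    grad = [0.0] * len(logits)
    for a, (p_old, adv) in enumerate(zip(old_probs, advantages)):
        ratio = probs[a] / p_old
        clipped = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
        if ratio * adv > clipped * adv:
            continue  # the clipped term is the minimum, so no gradient flows
        # d ratio / d logit_k = ratio * (1[k == a] - probs[k])
        for k in range(len(logits)):
            indicator = 1.0 if k == a else 0.0
            grad[k] += p_old * adv * ratio * (indicator - probs[k])
    return [z + lr * g for z, g in zip(logits, grad)]

rewards = [0.1, 0.5, 0.9]  # hypothetical reward-model scores
logits = [0.0, 0.0, 0.0]   # start from a uniform policy

for _ in range(50):        # outer loop: snapshot the current policy
    old_probs = softmax(logits)
    baseline = sum(p * r for p, r in zip(old_probs, rewards))
    advantages = [r - baseline for r in rewards]
    for _ in range(4):     # inner loop: a few clipped updates per snapshot
        logits = ppo_step(logits, old_probs, advantages)

final_probs = softmax(logits)
print([round(p, 3) for p in final_probs])
```

Within each outer iteration the updates stall once the probability ratios hit the clipping range, so progress toward the highest-scoring response happens in bounded steps.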

This process of generating outputs, gathering human feedback, updating the reward model, and further optimizing the language model can be repeated to continually improve the model’s alignment with human values. By cycling through these steps, language models become more capable of producing outputs that are helpful, safe, and contextually appropriate.

In short, RLHF in language models involves collecting human feedback to supervise the initial fine-tuning, building a reward model based on human preferences, and utilizing advanced reinforcement learning techniques, such as PPO, to optimize the main model for human-aligned performance. This multi-step framework enables language models to better understand and reflect the values, needs, and intentions of people using them.

The reward model is a central component in reinforcement learning from human feedback. Its purpose is to estimate how well a language model’s output matches human preferences, based directly on human feedback rather than objective or rule-based criteria. Training a reward model is a structured process that relies on data collected from human evaluators.

To start, the language model is prompted to generate a variety of possible responses to a set of questions or inputs. Human annotators then review these outputs, usually comparing two or more at a time. They rank or select which responses they find more helpful, clear, polite, or accurate. This ranking process reflects the fine-grained preferences that are difficult to capture with automated rules alone.
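One simple way to turn a ranking into training data is to expand it into every implied pairwise comparison. The sketch below assumes the annotator's list is ordered from best to worst. The dictionary layout with `prompt`, `chosen`, and `rejected` keys is a hypothetical format chosen for illustration.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-to-worst ranking into (chosen, rejected) pairs."""
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]

pairs = ranking_to_pairs(
    "How do I reset my password?",
    ["Clear step-by-step answer", "Vague answer", "Off-topic answer"],
)

for pair in pairs:
    print(pair["chosen"], ">", pair["rejected"])
```

A ranking of three responses yields three comparisons, so ranked annotations give more training pairs per prompt than a single two-way choice.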

These human rankings are used to create the training data for the reward model. Typically, the reward model is a neural network that takes a language model output and predicts a score representing how likely it is to be chosen by human evaluators. During the training phase, the reward model learns to assign higher scores to responses that humans prefer and lower scores to less desirable outputs. Rather than simply learning to classify responses as good or bad, the reward model utilizes relative rankings to enhance its predictions. Techniques like pairwise ranking loss help the model learn to consistently rank outputs in line with human judgments.
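One common form of pairwise ranking loss is the negative log-sigmoid of the score difference between the chosen and rejected responses. The article does not say which variant is used, so treat this as an assumed example. The scores below are made-up numbers standing in for reward model outputs.

```python
import math

def pairwise_ranking_loss(chosen_score, rejected_score):
    """-log(sigmoid(chosen - rejected)), written in a numerically stable form."""
    margin = chosen_score - rejected_score
    # Equivalent to log(1 + exp(-margin)) without overflow for large |margin|.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))

correct_order = pairwise_ranking_loss(2.0, 0.0)  # chosen scored higher
tie = pairwise_ranking_loss(0.0, 0.0)            # model cannot tell them apart
wrong_order = pairwise_ranking_loss(0.0, 2.0)    # rejected scored higher

print(f"{correct_order:.4f} {tie:.4f} {wrong_order:.4f}")
```

The loss depends only on the difference between the two scores, which is why the reward model learns a relative ordering rather than an absolute notion of good or bad.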

Once trained, the reward model offers a scalable and automated method for evaluating new language model outputs in accordance with human values. This allows the main language model to be optimized with reinforcement learning, using the reward model’s scores as a guide toward responses that are safer, more relevant, and better aligned with what people actually want in practice.

Later, we will use a pre-trained reward model that has already been trained on chosen and rejected responses from human annotators. Reusing this model means we do not have to collect manual rankings or train a reward model from scratch, which makes the process more accessible and reproducible as a tutorial.

To recap, I will go over each main step in the RLHF training process. This review will clarify how each stage helps shape and refine a language model to better match human values.

First, the language model is pretrained on a massive and diverse collection of human-written text. During this phase, the model learns core language elements such as grammar, basic reasoning, and world knowledge by trying to predict the next word in given sentences. This pretraining results in a model with strong general language skills, but it does not directly teach the model about specific human expectations or values.

After pretraining, the model is further refined in a supervised fine-tuning step. Human annotators provide ideal or high-quality responses to a variety of prompts. The language model is then trained to mimic these specific outputs. This stage helps transition the model from generic text generation to producing responses that people genuinely want and find helpful.

Next, the training process focuses on the reward model. The language model generates several possible responses to prompts, and human annotators compare these responses and indicate which they prefer. The reward model learns to assign higher scores to outputs that are more likely to be chosen by humans. This model makes it possible to later automate the evaluation of outputs using human preferences as the guiding standard.

In the final step, the language model is improved using reinforcement learning, typically with algorithms such as Proximal Policy Optimization. The model produces responses, the reward model assigns a score to each response, and the language model updates its parameters to maximize these scores. Through repeated cycles of this process, the language model becomes more adept at generating outputs that align with human values, such as clarity, helpfulness, and safety.

The four stages of RLHF (pretraining, supervised fine-tuning, reward model training, and reinforcement learning) work together to build a language model that is not only knowledgeable but also aligned with what real users expect and prefer.

RLHF represents a major step forward in making language models more useful, trustworthy, and responsive to what people actually value. Rather than relying solely on static datasets or hard-coded rules, RLHF incorporates continual human feedback into the training loop, allowing us to shape models toward outcomes that truly matter to us. As models become more deeply integrated into our lives and decision-making, these alignment techniques will be essential for ensuring that AI systems remain helpful, responsible, and aligned with user needs.

While RLHF is not a complete solution to all challenges in AI alignment, it is one of the most practical and powerful tools we have today for bridging the gap between raw prediction and meaningful, human-centered behavior in AI.