Weights & Biases on NVIDIA

Accelerate AI development with Weights & Biases on the NVIDIA platform. Build, evaluate, and deploy cutting-edge AI applications while maintaining complete control over your models and data. Combine NVIDIA’s industry-leading GPU architecture and Generative AI software with Weights & Biases’ comprehensive MLOps and LLMOps capabilities to drive innovation at scale.

Trusted by the world's leading companies

The importance of the methods and the processes and the tools, that is so vital. What could be described as MLOps, so vital – which is one of the reasons why your tools are so popular. It’s really complicated stuff to manage that workflow in a productive way and transform that raw material into an output that is a neural network or otherwise intelligence-at-scale, is quite a significant process.

Jensen Huang

CEO, NVIDIA

Accelerate AI development with Weights & Biases on NVIDIA

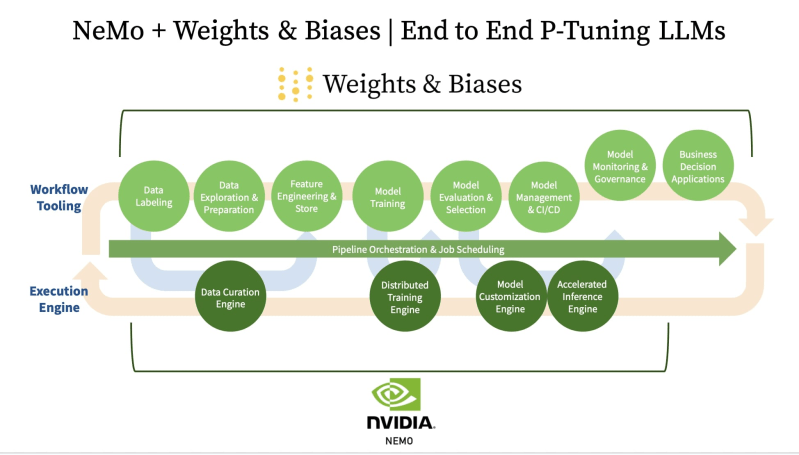

Weights & Biases is an NVIDIA DGX-ready software partner and NVIDIA AI Enterprise Validated software partner, enabling seamless integration with NVIDIA’s AI Enterprise software stack. The integration between Weights & Biases and NVIDIA frameworks such as MONAI for medical imaging and BioNeMo for life sciences lets organizations accelerate their AI development while maintaining complete visibility into their training processes. Weights & Biases also supports the full suite of NVIDIA NIM and NeMo Microservices, providing all the tools needed to build, debug, evaluate, and productionize Generative AI-powered applications.

Why Weights & Biases on NVIDIA

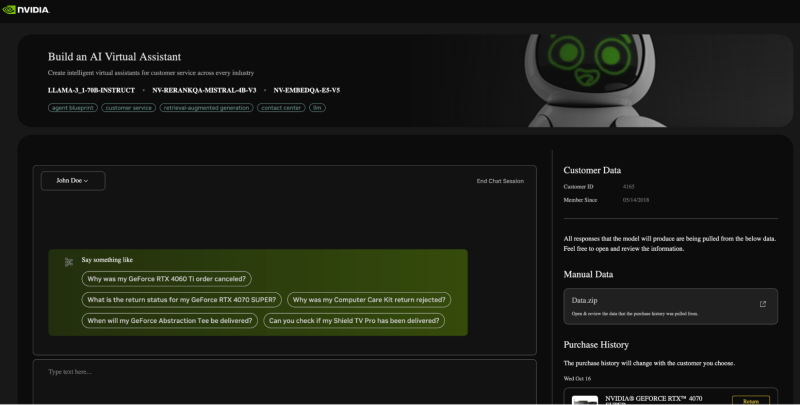

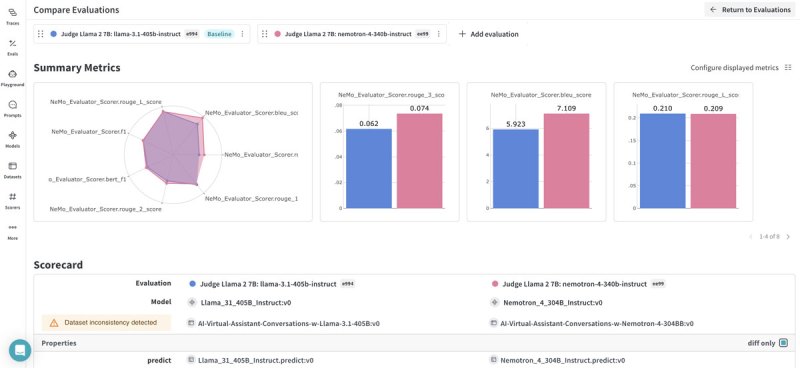

Build Production GenAI Applications

Leverage W&B Weave to debug and evaluate applications powered by NVIDIA NIMs. W&B Models integrates seamlessly with NVIDIA NIM and the broader NeMo Microservices suite.

Accelerated model development

Maximize GPU utilization and optimize training workflows with comprehensive experiment tracking on NVIDIA DGX systems. Monitor resource consumption and training metrics in real time.

Unified system of record

Create a centralized repository for all AI assets with full model and data lineage tracking. Maintain complete visibility across your entire ML workflow while keeping control of your infrastructure.

Flexible deployments

Maintain data sovereignty and AI ownership while leveraging NVIDIA’s enterprise-grade infrastructure. Implement robust security and governance for on-premises deployments.

How it works

Features

Deep NVIDIA integration

Deploy Weights & Biases with NVIDIA infrastructure to benefit from native integrations with NVIDIA AI Enterprise software stack, DGX systems, and frameworks like MONAI and BioNeMo. Track GPU utilization and optimize resource consumption across your AI lifecycle.

Enterprise AI development

Leverage NVIDIA AI Enterprise with over 50 pretrained models and frameworks. Scale your training workflows efficiently while maintaining complete visibility of your development pipeline through Weights & Biases’ enterprise-grade tracking capabilities.

Advanced model development

Maximize GPU utilization on DGX systems with comprehensive experiment tracking and real-time monitoring. Scale your training workflows efficiently with NVIDIA-optimized resource allocation.

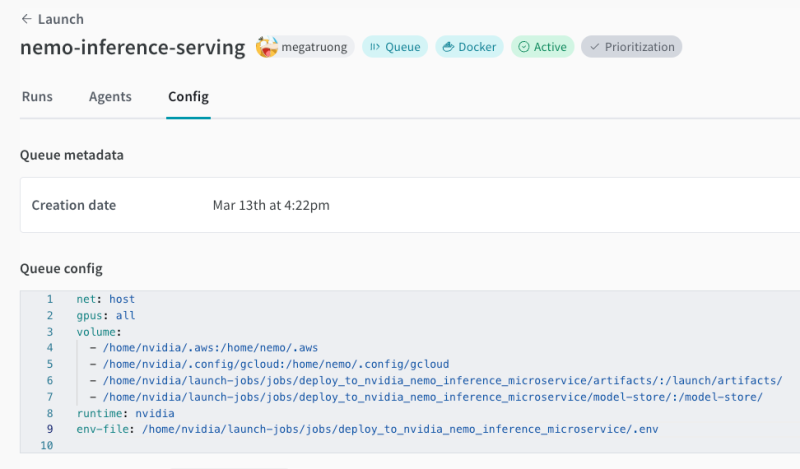

Optimized model inference

Seamlessly deploy models using NVIDIA Inference Microservice (NIM) with built-in optimization for NVIDIA Triton Inference Server. Deploy anywhere – on-premises or multi-cloud – while maintaining consistent performance.

Flexible deployment options / Enterprise security and governance

Implement robust security controls for private cloud or on-premises DGX deployments. Maintain complete data sovereignty and AI asset ownership while leveraging NVIDIA’s enterprise-grade infrastructure.

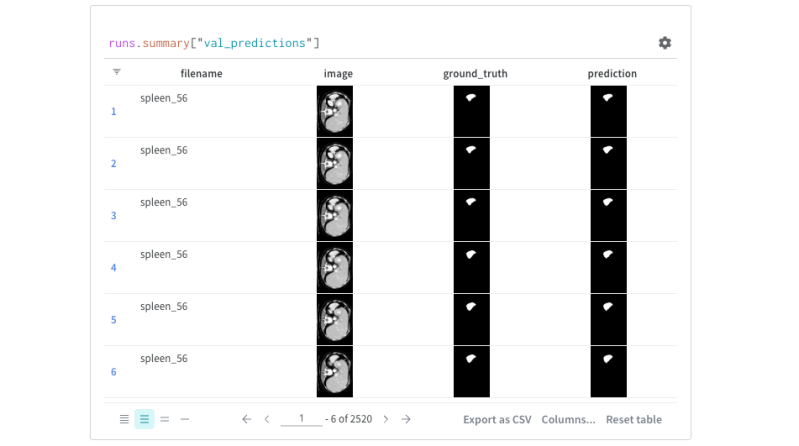

Healthcare & Life Sciences AI development

Accelerate medical imaging research with MONAI integration and advance protein research through the BioNeMo framework. Track metrics, visualize results, and collaborate on healthcare AI projects with W&B Models while maintaining complete experiment history.

Low-code model development

Streamline production-quality AI development using NVIDIA TAO Toolkit integration. Track fine-tuning experiments of pretrained models, monitor convergence, and evaluate performance through W&B’s comprehensive visualization tools.

Collaborative development

Enable seamless knowledge sharing with shared workspaces and interactive visualization tools, validated for NVIDIA AI Enterprise software. Foster innovation across AI teams while maintaining comprehensive tracking of all NVIDIA resources.

Model registry and versioning

Centralize model and dataset management with full versioning and lineage tracking. Ensure reproducibility throughout the model lifecycle while leveraging NVIDIA’s scalable infrastructure.

Resource optimization

Monitor and optimize GPU utilization across your entire AI infrastructure. Make data-driven decisions about resource allocation and scaling to maximize the value of your NVIDIA investment.
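
As a rough illustration of the data behind such decisions, the Python sketch below parses per-GPU readings in the CSV format produced by `nvidia-smi --query-gpu=index,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits` and flags devices that look underused. The function names, the 30% threshold, and the sample readings are hypothetical and chosen only for illustration. Weights & Biases records GPU system metrics on its own during a run, so this is a sketch of the idea rather than a description of how the product works.

```python
import csv
import io

# Made-up sample in the column order: index, utilization.gpu (%),
# memory.used (MiB), memory.total (MiB). Not real measurements.
SAMPLE = """0, 93, 70213, 81920
1, 12, 1024, 81920
2, 88, 65536, 81920
"""


def parse_gpu_stats(text):
    """Turn nvidia-smi CSV text (noheader, nounits) into a list of dicts."""
    stats = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:  # skip blank lines
            continue
        index, util, mem_used, mem_total = (int(value) for value in record)
        stats.append({
            "index": index,
            "util_pct": util,
            "mem_pct": round(100 * mem_used / mem_total, 1),
        })
    return stats


def underused_gpus(stats, threshold=30):
    """Return the indices of GPUs whose utilization is below the threshold.

    The default threshold is an arbitrary value picked for this example.
    """
    return [gpu["index"] for gpu in stats if gpu["util_pct"] < threshold]


if __name__ == "__main__":
    gpu_stats = parse_gpu_stats(SAMPLE)
    print("Underused GPUs:", underused_gpus(gpu_stats))
```

On a machine with NVIDIA drivers installed, the same text could be captured from the `nvidia-smi` command above (for example with `subprocess.run`) instead of the hard-coded sample.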

Woven by Toyota / Toyota Research Institute leverages NVIDIA DGX infrastructure with Weights & Biases to accelerate autonomous vehicle research and development.

Invitae, one of the fastest-growing genetic testing companies in the world, runs rapid AI experiments to bring the power of genetic insights to everyone.

Blue River Technology, a John Deere subsidiary, leverages Weights & Biases and NVIDIA Jetson to accelerate building computer vision models that detect weeds for targeted crop spraying.