AGENT REINFORCEMENT TRAINER (ART)

Train, evaluate, and iterate on LLM agents in hours, not weeks

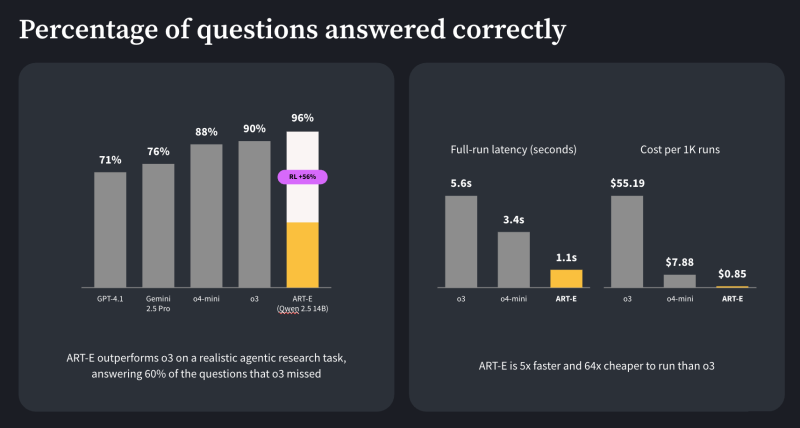

Agent Reinforcement Trainer (ART) is an open-source framework for training agentic LLMs to improve their performance and reliability through experience. It provides a lightweight interface to reinforcement learning (RL) algorithms such as Group Relative Policy Optimization (GRPO), improving model quality while keeping training costs low. ART integrates with W&B Training Serverless RL, which removes the overhead of provisioning and managing infrastructure and provides instant access to elastic GPU capacity.
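GRPO samples a group of rollouts for the same task and scores each rollout against the group's average. This removes the need for a separately learned value model (critic). The sketch below shows only that group-relative advantage step, using the Python standard library. It illustrates the general technique and is not ART's internal implementation. The reward values are made up, and the policy-gradient update that consumes these advantages is not shown.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Normalize each reward against the other rollouts sampled for the same task.

    GRPO uses the group as its baseline instead of a learned critic:
    A_i = (r_i - mean(r)) / (std(r) + eps)
    """
    if not rewards:
        raise ValueError("need at least one reward per group")
    mean = statistics.fmean(rewards)
    # Population standard deviation of the group. eps avoids division by zero
    # when every rollout in the group received the same reward.
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four hypothetical rollouts of one task, scored by a hypothetical reward function.
rewards = [1.0, 0.0, 0.5, 0.5]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # [1.414, -1.414, 0.0, 0.0]
```

Rollouts that beat their siblings get a positive advantage and are reinforced. Rollouts that fall short get a negative one. A group where every rollout scores the same produces no learning signal.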

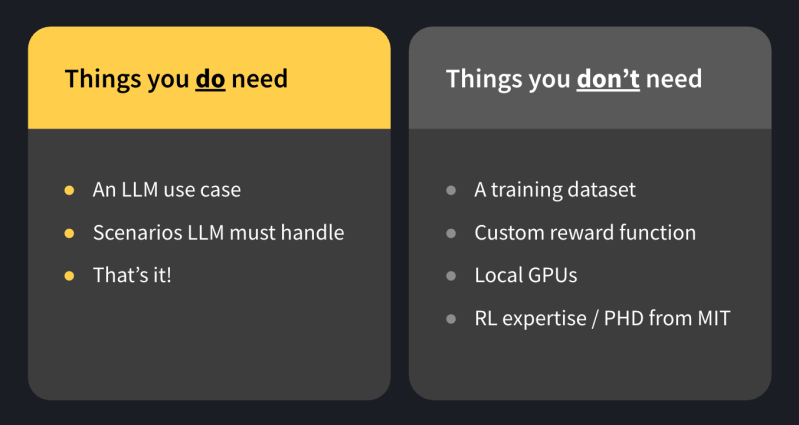

RL made accessible to everyone

Applying reinforcement learning to LLMs is still an early-stage practice, and the algorithms are intricate: small, subtle mistakes can prevent convergence or destabilize training. ART hides this complexity behind a developer-friendly API, so you can train models without wrestling with fragile scripts or algorithmic details.

Why ART?

Built for real-world agents

Real user interactions are often multi-turn. ART supports multi-turn rollouts, so your agent learns from realistic conversations and performs reliably when deployed.
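A multi-turn rollout is a whole conversation played out turn by turn and then scored as a single episode. The sketch below models that idea with a hypothetical `Trajectory` dataclass and `rollout` function, plus toy stand-ins for the model and the reward function. None of these names are ART's API; they are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]  # OpenAI-style chat message: {"role": ..., "content": ...}


@dataclass
class Trajectory:
    """Hypothetical container for one multi-turn episode and its final score."""
    messages: List[Message] = field(default_factory=list)
    reward: float = 0.0


def rollout(
    agent: Callable[[List[Message]], str],
    user_turns: List[str],
    score: Callable[[List[Message]], float],
) -> Trajectory:
    """Play a scripted conversation turn by turn, then score the whole episode."""
    traj = Trajectory(messages=[{"role": "system", "content": "You are a helpful agent."}])
    for user_text in user_turns:
        traj.messages.append({"role": "user", "content": user_text})
        # The agent sees the full history, so later replies can depend on earlier turns.
        reply = agent(traj.messages)
        traj.messages.append({"role": "assistant", "content": reply})
    # One reward is attached to the whole conversation, not to individual turns.
    traj.reward = score(traj.messages)
    return traj


# Hypothetical toy agent: replies with the number of user turns seen so far.
def echo_agent(history: List[Message]) -> str:
    return f"ack {sum(m['role'] == 'user' for m in history)}"


# Hypothetical toy reward: counts assistant replies that start with "ack".
def count_acks(history: List[Message]) -> float:
    return float(sum(m["content"].startswith("ack") for m in history if m["role"] == "assistant"))


traj = rollout(echo_agent, ["book a flight", "make it Tuesday"], count_acks)
print(len(traj.messages), traj.reward)  # 5 2.0
```

Scoring the finished conversation, rather than each reply in isolation, lets training reward behavior that only pays off across several turns.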

Drop‑in integration

ART's OpenAI-compatible chat endpoint slots straight into your existing code or into frameworks such as CrewAI, the OpenAI Agents SDK, and LangGraph.
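"OpenAI-compatible" means the endpoint accepts the same Chat Completions request shape as OpenAI's API: a POST to `{base_url}/chat/completions` with a JSON body containing `model` and `messages`, authenticated with a bearer token. The sketch below builds, but does not send, such a request using only the standard library. The base URL, key, and model name are hypothetical placeholders, not ART's actual serving address.

```python
import json
import urllib.request

# Hypothetical values: substitute the base URL, key, and model name your deployment exposes.
BASE_URL = "http://localhost:8000/v1"
API_KEY = "sk-placeholder"
MODEL = "my-agent-model"


def build_chat_request(messages, base_url=BASE_URL, model=MODEL, api_key=API_KEY):
    """Build (but do not send) a request in the OpenAI Chat Completions wire format."""
    body = {"model": model, "messages": messages}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request([{"role": "user", "content": "Summarize my inbox."}])
print(req.get_method(), req.full_url)  # POST http://localhost:8000/v1/chat/completions
# Sending it would be: urllib.request.urlopen(req)
```

In practice you would rarely build this request by hand. Because the wire format matches, code that already uses the official `openai` Python client can usually be redirected by passing a different `base_url` (and `api_key`) when constructing `OpenAI(...)`.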

Works with existing code

ART provides wrappers that plug RL training into existing apps, and it abstracts the training server into a modular service that your code doesn't need to touch.

Integrated observability

ART includes built-in integrations with popular observability tools, such as Weights & Biases and Langfuse, to support debugging, monitoring, and reproducibility.