SERVERLESS SFT

Train large language models on new tasks alongside RL

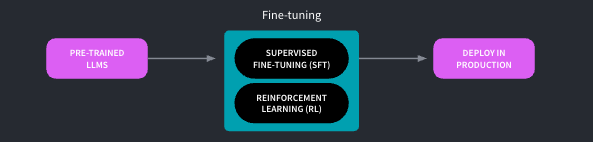

LLMs are strong next-token predictors, but they need fine-tuning to perform agentic tasks. Serverless Supervised Fine-Tuning (SFT) lets you teach LLMs specific tasks and combine that training with reinforcement learning (RL) in a unified workflow. Because Serverless SFT is built into the W&B Training workflow, you can switch quickly between SFT and RL without shuttling artifacts across systems.

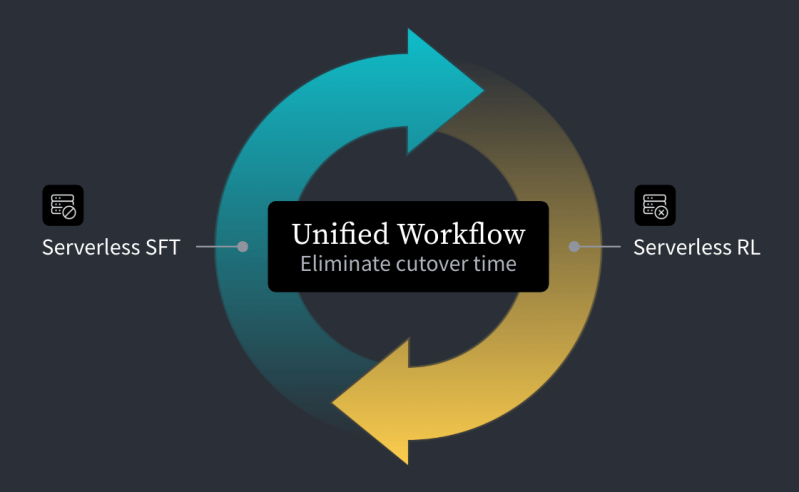

Integrated post-training workflow

Supervised fine-tuning is often not enough on its own: you may also need RL post-training to improve agent reliability. When SFT and RL run as separate jobs, you have to move artifacts between them, which slows down iteration. W&B Training provides an integrated post-training workflow through the Agent Reinforcement Trainer (ART) API, so you can alternate between SFT and RL as often as needed and iterate faster.

We handle the infrastructure for you

Serverless SFT reduces time to start fine-tuning from weeks to hours by making GPU capacity available as infrastructure-as-a-service (IaaS).

You write and control the training loop locally while we handle distributed training.

Faster time to market

Serverless SFT provides on-demand GPU capacity on the CoreWeave cloud as a serverless backend for the Agent Reinforcement Trainer (ART) API, eliminating the need to procure, configure, and manage GPU clusters.

Zero infrastructure headaches

Serverless SFT fully manages distributed training and delivers high utilization across fine-tuning jobs. It also auto-scales to match workload demand while maximizing efficiency.