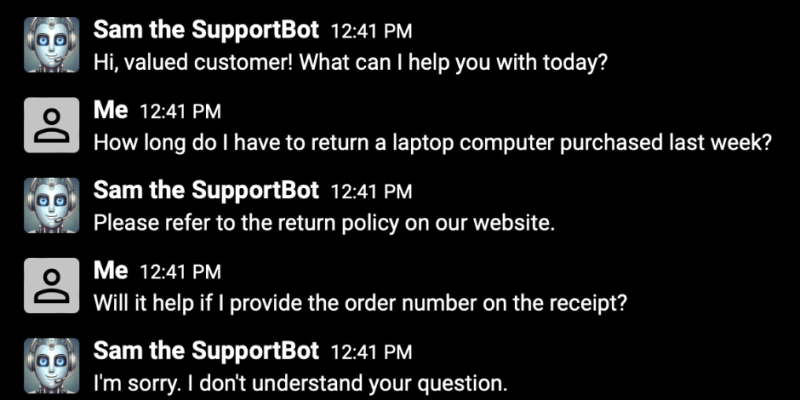

Trusted AI agents and applications require rigorous evaluations

Deploy your AI systems with confidence

Evaluations measure the performance of AI agents and applications across multiple dimensions, including quality, latency, cost, and safety. Using a combination of benchmark tests, real-world scenarios, and comparative analysis, evaluations assess how predictably and proficiently an AI application solves problems and completes tasks. The results reveal how well it performs and where it needs improvement.

Benefits of using evaluations

Go beyond “vibe checks” and gain the visibility and insight you need into how your AI agents and applications behave.

Protect your brand reputation by catching and correcting bias, errors, or inconsistencies before they reach your customers.

Analyze and optimize your AI agents and applications to ensure enterprise-grade reliability and scalability.

How to evaluate your AI applications with Weave

Scorers

Weave Scorers evaluate AI inputs and outputs and return evaluation metrics.
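To make the idea concrete, here is a minimal, library-free sketch of what a scorer does: it takes an example's expected answer and the model's output and returns a dictionary of metrics. This is plain Python for illustration only, not the Weave Scorers API; the function name `exact_match_scorer` and its metrics are hypothetical.

```python
# Hypothetical scorer sketch (not the Weave API): compare a model output
# with the expected answer and return evaluation metrics as a dict.

def exact_match_scorer(expected: str, output: str) -> dict:
    """Return simple quality metrics for one model output."""
    # Normalize whitespace and case so trivial formatting differences don't count.
    normalized_expected = expected.strip().lower()
    normalized_output = output.strip().lower()
    return {
        # True when the normalized strings are identical.
        "exact_match": normalized_output == normalized_expected,
        # Output length relative to the expected answer (guard against empty expected).
        "length_ratio": len(normalized_output) / max(len(normalized_expected), 1),
    }


print(exact_match_scorer("Paris", " paris "))
# → {'exact_match': True, 'length_ratio': 1.0}
```

Returning a dictionary rather than a single number lets one scorer report several metrics at once, which fits evaluating quality alongside other dimensions.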

Datasets

Weave Datasets help you collect, organize, track, and version the examples you use to evaluate agentic workflows, so results are easy to compare.
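As a rough illustration of why versioned examples matter for comparison, the sketch below derives a version id from a dataset's contents and reports accuracy alongside it, so two results can be compared only when they share the same version. It is plain Python, not the Weave Datasets API; `examples`, `dataset_version`, `toy_model`, and `evaluate` are hypothetical names, and content hashing is just one assumed way to version data.

```python
import hashlib
import json

# Hypothetical evaluation examples (rows of a dataset).
examples = [
    {"question": "Capital of France?", "expected": "Paris"},
    {"question": "Capital of Japan?", "expected": "Tokyo"},
]


def dataset_version(rows: list) -> str:
    """Derive a short version id from the dataset contents via a SHA-256 hash."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:8]


def toy_model(question: str) -> str:
    """Stand-in for an AI application: answers from a fixed lookup table."""
    answers = {"Capital of France?": "Paris"}
    return answers.get(question, "I don't know")


def evaluate(rows: list) -> dict:
    """Run the model on every row and report accuracy tagged with the dataset version."""
    correct = [
        toy_model(row["question"]).strip().lower() == row["expected"].lower()
        for row in rows
    ]
    return {"version": dataset_version(rows), "accuracy": sum(correct) / len(correct)}


print(evaluate(examples)["accuracy"])
# → 0.5
```

Any edit to the examples changes the version id, which flags results that were produced on different data.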

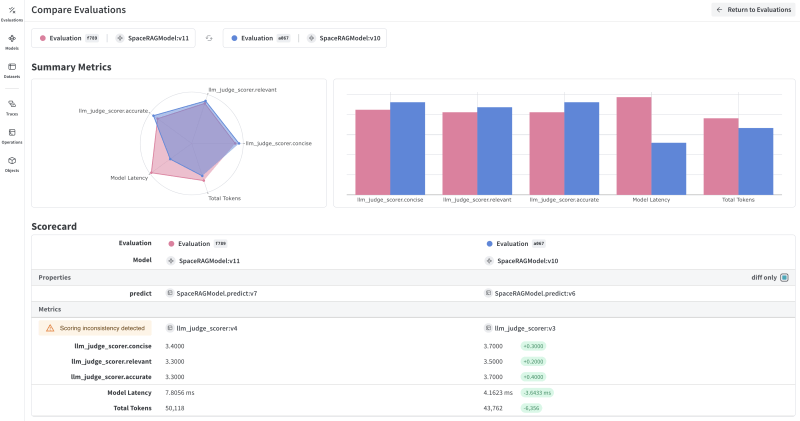

Comparisons

Weave Comparisons allow you to visually compare and diff code, traces, prompts, models, and model configurations.
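Comparisons are a visual feature, but the underlying idea of diffing two versions of a prompt can be sketched with Python's standard `difflib` module. This is an illustrative stand-in, not how Weave Comparisons is implemented; the prompt text and the `diff_prompts` helper are hypothetical.

```python
import difflib

# Two hypothetical versions of a system prompt, split into lines.
prompt_v1 = "You are a helpful assistant.\nAnswer in one sentence.".splitlines()
prompt_v2 = "You are a helpful assistant.\nAnswer in two sentences and cite a source.".splitlines()


def diff_prompts(old: list, new: list) -> list:
    """Return a unified diff of two prompt versions as a list of lines."""
    return list(
        difflib.unified_diff(old, new, fromfile="prompt_v1", tofile="prompt_v2", lineterm="")
    )


for line in diff_prompts(prompt_v1, prompt_v2):
    print(line)
```

Lines prefixed with `-` exist only in the old prompt and lines prefixed with `+` only in the new one, which makes it easy to connect a change in evaluation results to a specific edit.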

Trusted by leading teams across industries, from financial institutions to eCommerce giants

Socure, a graph-defined identity verification platform, uses Weights & Biases to streamline its machine learning initiatives, keeping everyone’s wallets a little more secure.

Qualtrics, a leading experience management company, uses machine learning and Weights & Biases to improve sentiment detection models that identify gaps in their customers’ business and areas for growth.

Invitae, one of the fastest-growing genetic testing companies in the world, uses Weights & Biases for medical record comprehension, leading to a better understanding of disease trajectories and predictive risk.

The Weights & Biases end-to-end AI developer platform

The Weights & Biases platform helps you streamline your workflow from end to end

Models

Experiments

Track and visualize your ML experiments

Sweeps

Optimize your hyperparameters

Registry

Publish and share your ML models and datasets

Automations

Trigger workflows automatically

Weave

Traces

Explore and debug LLMs

Evaluations

Rigorous evaluations of GenAI applications